Annual Audit Manual

COPYRIGHT NOTICE — This document is intended for internal use. It cannot be distributed to or reproduced by third parties without prior written permission from the Copyright Coordinator for the Office of the Auditor General of Canada. This includes email, fax, mail and hand delivery, or use of any other method of distribution or reproduction. CPA Canada Handbook sections and excerpts are reproduced herein for your non-commercial use with the permission of The Chartered Professional Accountants of Canada (“CPA Canada”). These may not be modified, copied or distributed in any form as this would infringe CPA Canada’s copyright. Reproduced, with permission, from the CPA Canada Handbook, The Chartered Professional Accountants of Canada, Toronto, Canada.

4028.4 Testing IT dependencies with and without ITGC reliance

Sep-2022

See OAG Audit 6054 for guidance on testing automated controls and calculations.

CAS Requirement

When using information produced by the entity, the auditor shall evaluate whether the information is sufficiently reliable for the auditor's purposes, including, as necessary in the circumstances (CAS 500.9):

(a) Obtaining audit evidence about the accuracy and completeness of the information; and

(b) Evaluating whether the information is sufficiently precise and detailed for the auditor's purposes.

OAG Guidance

Introduction

In many entities, much of the information used for carrying out activities within the entity’s process to monitor the system of internal controls and other manual and automated controls will be produced by the entity’s information system. Any errors or omissions in the information could render such controls ineffective.

In addition, when executing substantive procedures we often use information generated by an entity’s IT applications. If this information is inaccurate or incomplete, our audit evidence may also be inaccurate or incomplete.

For these reasons our audit plan includes procedures to address the reliability of information used:

- In the operation of relevant controls we plan to rely on, and/or

- As the basis for our substantive testing procedures, including substantive analytical procedures and tests of details.

We obtain an understanding of the sources of the information that management uses and determine the nature and extent of our testing of the reliability of this information.

Determining the nature and extent of evidence needed to assess the reliability of information used in the audit is a matter of professional judgment, impacted by factors such as:

- The level of audit evidence we are seeking from the control or substantive testing procedure.

- The risk of material misstatement associated with the related FSLI(s).

- The degree to which the effectiveness of the control or the design of the substantive test depends on the completeness and accuracy of the information.

- The nature, source and complexity of the information, including the degree to which the reliability of the information depends on other controls (i.e., manual or automated information processing controls and/or ITGCs).

- Prior audit knowledge relevant to the assessment (i.e., prior year control deficiencies, changes in the control environment, or other assessed risks).

Additional factors for consideration include the complexity of the application and the type of the information, as illustrated below:

| Less Persuasive Evidence Needed | Factors | More Persuasive Evidence Needed |
|---|---|---|
| Non‑complex application | Complexity of application | Complex application |
| Standard report | Report Type | Ad‑hoc Query |
| Seeking lower level of evidence from testing | How the Report is Used in the Audit | Seeking higher level of evidence from testing |

Understand the Nature and Source of Underlying Information

In order to plan our approach for assessing the reliability of underlying information used in the audit, we first need to consider the nature, source and complexity of the information itself. We typically use information generated by an IT application in the form of a report (also referred to as “system generated reports”). The types of reports we may identify are as follows:

| Type of report | Description |
|---|---|
| Standard Report | A report designed by an external software developer that cannot be modified or customized by the entity. Standard reports are reports that come preconfigured and/or predefined in well‑established software packages. Many applications, such as SAP and JD Edwards, provide standard reports, such as accounts receivable aging reports, irrespective of the type of entity. |
| Customized Report | A modified standard report or a report developed to meet the specific need of the user. Custom reports allow an entity to design the information included in the report and how it is presented. For example, management may modify a standard accounts receivable aging report to show aging by customer instead of invoice, which would then become a custom report. Reports generated from an entity‑developed (“in‑house”) application are considered to be custom reports. Entity end‑users are not typically able to create or modify customized reports, as customization generally needs to be done by the application vendor or application administrator. |
| Query | A report generated ad hoc or on a recurring basis that allows users to define a set of criteria to generate specific results. Queries are common when information is needed for a user defined item, transaction, or group of items or transactions. For example, a query could be used to generate a list of all journal entries for a defined business unit. Query languages (e.g., SQL, SAS) may be used by IT or sophisticated users to design these reports. Recurring queries subject to ITGCs may have similar characteristics to a custom report. Such characteristics may include queries that cannot be modified other than by authorized individuals once they are developed, tested, and placed into production. Ad hoc queries are typically performed for a specific use and likely are not subject to ITGCs. Information generated by an application used in substantive testing (e.g., populations used for making selections for substantive tests of details or data for use in a disaggregated analytical procedure) may be the result of ad hoc queries. |

Information generated by an IT application can also be extracted to modifiable formats (e.g., spreadsheet). Refer to OAG Audit 2051 for considerations related to spreadsheets and other modifiable formats such as Microsoft Access.

Determining the type of report, including whether the report is a standard report, is dependent on entity specific facts and circumstances and requires judgment. Consider the need to involve an IT audit specialist in determining the nature of the different types of reports to be used in the audit. For example, many applications provide the ability to generate customized reports that may appear to be very similar to standard reports also available in the application. We need to distinguish between the two because it may impact the nature and extent of audit procedures needed to test the reliability of the information provided by the reports.

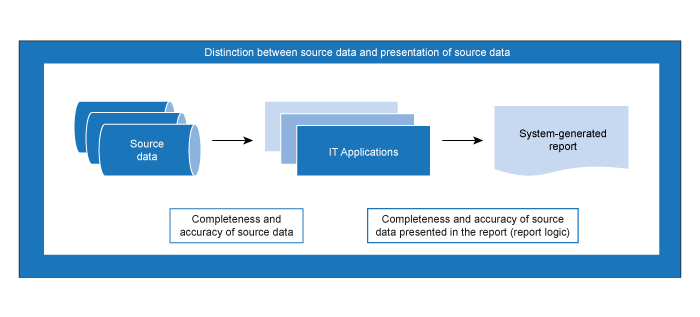

Information generated by an IT application—Testing approach—Completeness and accuracy of the source data

Testing the operating effectiveness of information processing controls is often an effective and efficient approach to obtaining audit evidence over the completeness and accuracy of the source data. An example of such an information processing control is a completeness control that involves the use of pre‑numbered documents. As transactions are input, missing and duplicate document numbers are identified and pulled into an exception report for follow‑up and resolution.
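As an illustration only, the exception logic behind such a completeness control can be sketched as follows. This is a minimal sketch that assumes document numbers are integers issued in an unbroken sequence; the function name `sequence_exceptions` and the sample numbers are hypothetical and are not drawn from any entity's application.

```python
from collections import Counter


def sequence_exceptions(doc_numbers):
    """Return (missing, duplicates) for a list of integer document numbers.

    Assumes numbers are expected to run consecutively between the lowest
    and highest number observed in the input.
    """
    counts = Counter(doc_numbers)
    if not counts:
        return [], []
    # Numbers that appear more than once are potential duplicate entries.
    duplicates = sorted(n for n, c in counts.items() if c > 1)
    # Numbers inside the observed range that never appear are potential gaps.
    low, high = min(counts), max(counts)
    missing = [n for n in range(low, high + 1) if n not in counts]
    return missing, duplicates


missing, duplicates = sequence_exceptions([1001, 1002, 1004, 1004, 1006])
print("Missing:", missing)
print("Duplicates:", duplicates)
```

Items flagged by a check of this kind are only candidates for follow-up. A gap may, for example, reflect a voided document rather than an unrecorded transaction.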

Although the guidance within this section references testing of “ITGCs and information processing controls”, obtaining audit evidence over the completeness and accuracy of source data can also involve agreeing the data (e.g., individual transactions) back to underlying source documents and tracing source documents into the data, or testing other relevant manual controls over the source data. In some cases, the completeness and accuracy of the underlying source data will be tested as part of our audit procedures over a particular process or account. As such, we may be able to leverage work already performed over the source data as part of the audit.

Where our testing approach over source data includes testing of operating effectiveness of information processing controls, we consider whether it is appropriate to apply the guidance in OAG Audit 6056.

The references to testing “ITGCs and information processing controls” throughout this guidance are intended as a general reference and a reminder that as part of testing the completeness and accuracy of information in a report we need to obtain evidence that the underlying source data is complete and accurate. The remainder of the testing guidance below does not address the completeness and accuracy of the underlying source data and instead focuses on testing the completeness and accuracy of the source data presented in the report.

Information generated by an IT application—Testing approach—Completeness and accuracy of the source data presented in the report (report logic)

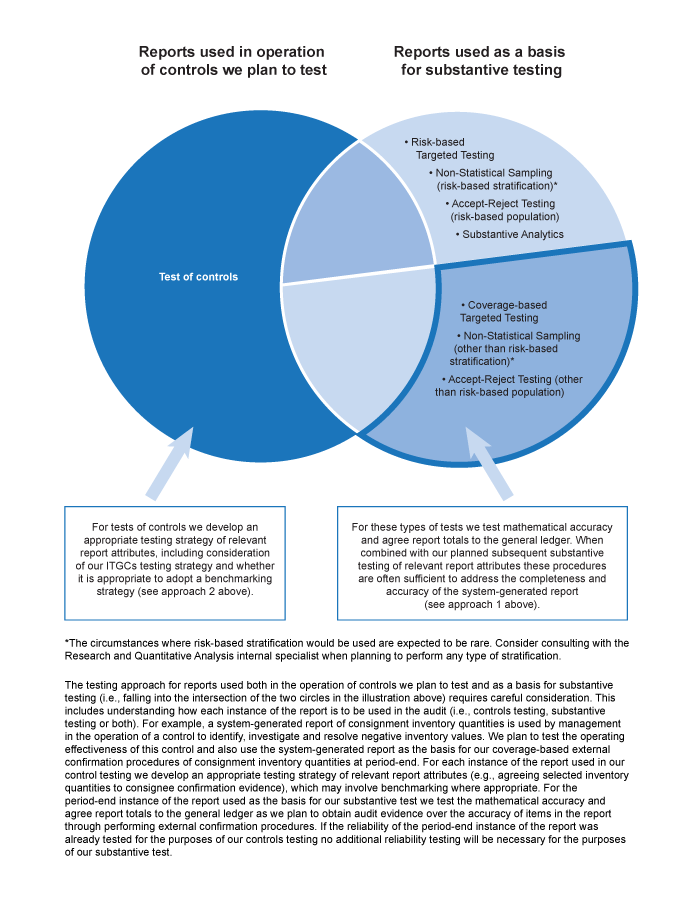

Depending on the nature of the information we will be using from the report, we test relevant report attributes by adopting one of the following two approaches:

We first consider whether the information generated by an IT application:

- a) is to be used as the basis for a substantive test of details; and

- b) does not include any attributes that are being relied upon in selecting items for testing (other than where monetary values are used for coverage‑based selection) as illustrated in the examples and chart below.

Where both a) and b) apply, we test the mathematical accuracy of the information and agree totals from the information generated by an application to the general ledger. When combined with our planned test of details, these procedures are often sufficient to address the completeness and accuracy of the system‑generated report.

For example, where we plan to substantively test additions to property, plant and equipment by targeting items based on monetary value selected from a system‑generated report, we can obtain evidence of the completeness of the report by testing the mathematical accuracy of the report total and agreeing the total additions from the report to the property and equipment movement schedule, testing the mathematical accuracy of the movement schedule and then agreeing the total property, plant and equipment reflected on the movement schedule to the general ledger. We would then typically obtain evidence of the accuracy of the system‑generated report when we perform substantive testing over the selected additions (i.e. we can leverage our substantive testing to address accuracy of the additions included in system‑generated report).
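The two completeness procedures in this example (testing mathematical accuracy and agreeing the total to the general ledger) can be sketched as below. This is a simplified illustration that agrees the report total directly to a single general ledger balance and omits the intermediate movement schedule step. The function name `foot_and_agree`, the `tolerance` parameter and the amounts are hypothetical.

```python
from decimal import Decimal


def foot_and_agree(line_amounts, reported_total, gl_balance, tolerance=Decimal("0")):
    """Re-add the report lines and compare the reported total to the GL balance.

    Amounts are passed as strings and converted to Decimal to avoid
    floating-point rounding differences.
    """
    recomputed = sum((Decimal(a) for a in line_amounts), Decimal("0"))
    reported = Decimal(reported_total)
    return {
        "recomputed_total": recomputed,
        # Mathematical accuracy: do the lines add up to the total shown?
        "foots": abs(recomputed - reported) <= tolerance,
        # Completeness: does the report total agree to the general ledger?
        "agrees_to_gl": abs(reported - Decimal(gl_balance)) <= tolerance,
    }


result = foot_and_agree(["1200.50", "830.25", "45.00"], "2075.75", "2075.75")
print(result)
```

A failed comparison indicates a difference to investigate or reconcile. It does not by itself establish that the report is unreliable.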

The testing approach described above for system‑generated information meeting conditions a) and b) may still be appropriate in circumstances where we do not test ITGCs or determine that ITGCs are not operating effectively.

Where the information generated by the IT application is not of the nature described in the first approach above (e.g., a report detailing property, plant and equipment additions by location, where location is relevant to the design of a control being tested, or as a basis for selecting items to substantively test as part of a risk based targeted test) we need to determine whether testing of the ITGCs and information processing controls related to the application generating the information is expected to be efficient and/or effective.

- Where testing of the ITGCs and information processing controls is not expected to be efficient and/or effective, in addition to testing the mathematical accuracy of the information and agreeing totals from the report to the general ledger (if applicable), we also need to perform appropriate substantive procedures to test the completeness and accuracy of the information for each instance of the information used in the audit (see later guidance on the nature of testing to be performed).

- Where testing of the ITGCs and information processing controls is expected to be efficient and effective, in addition to agreeing totals from the information to the general ledger (if applicable), we adopt one of the following approaches to address completeness and accuracy of the information:

  - a benchmarking approach, where permitted (see section G of the guidance to the decision tree below for an explanation of a benchmarking approach and the circumstances where this is appropriate), or

  - Perform testing of mathematical accuracy, if applicable, and appropriate substantive testing. This will include substantive testing of at least a single instance, regardless of the number of instances of the information to be used in the audit (see section I of the guidance to the decision tree for typical substantive testing approaches).

The illustration below summarizes the types of testing procedures that may utilize system‑generated reports and whether we would typically test reliability using approach 1) or 2) above.

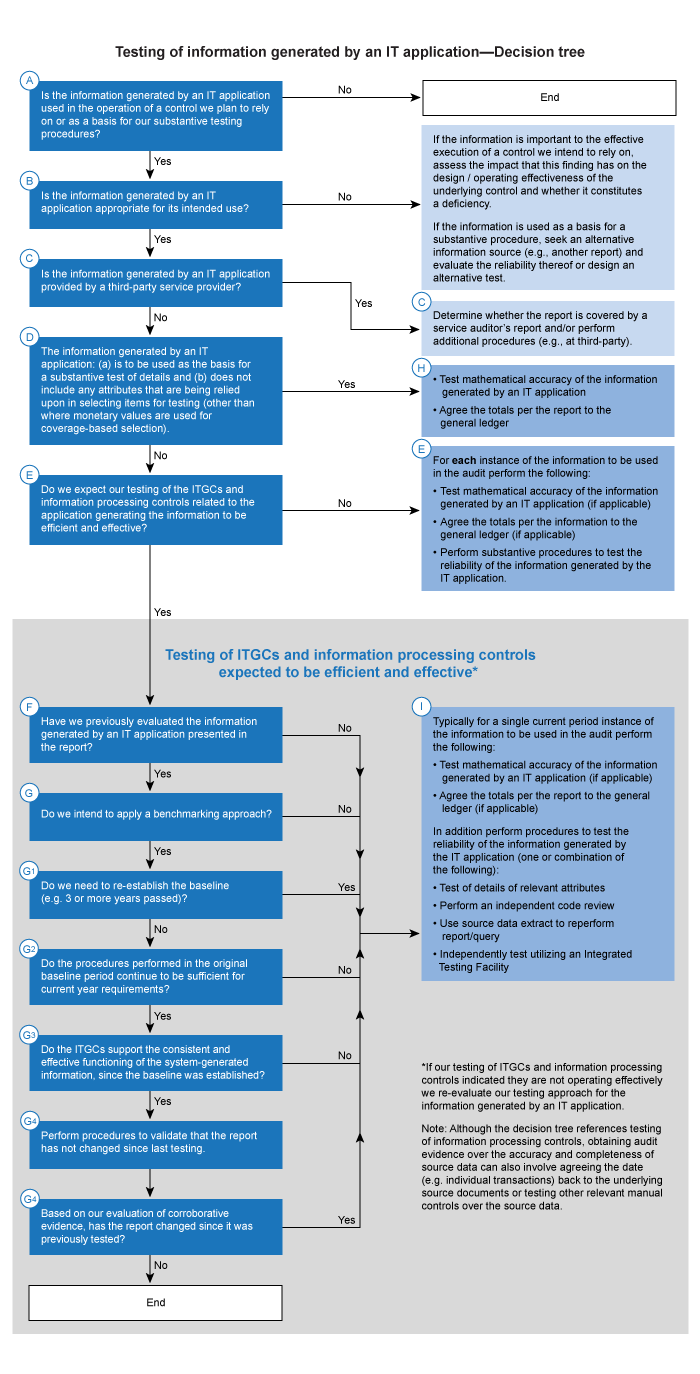

Testing of information generated by an IT application—Decision tree

The testing approaches described above are illustrated in the decision tree below. The decision tree is accompanied by supporting guidance for each step in the process.

Decision tree steps and related guidance

(A) Is the information generated by an IT application used in the operation of a control we plan to rely on or as a basis for our substantive testing procedures?

As part of our audit procedures we might identify information generated by an IT application at any phase of the audit, but it typically occurs when obtaining an understanding and evaluating the design effectiveness of controls that address risks of material misstatement at the assertion level and that are in the control activities component (OAG Audit 5035.1) or when planning and performing substantive procedures.

Once a report has been identified as being used in, and important to, the effective execution of a control or used as the basis for our substantive procedures, we determine whether the information generated by an application in the report is reliable for the intended use based on the considerations outlined in this tree.

(B) Is the information generated by an IT application appropriate for its intended use?

Before we develop our audit approach, we gain an understanding of the intended use of the information generated by an IT application. Understanding the intended use aids us in determining the information in the report that is relevant to the audit. Our testing approach may vary depending on the type of information and intended purpose. For example, it may not always be necessary to assess mathematical accuracy (e.g., if the report does not include extended values, sub‑totals or totals).

(C) Is the information generated by an IT application provided by a third‑party service provider?

Information used by management or the engagement team may be designed and produced by third‑party service providers. Management will need to have controls to monitor such activities as part of the entity’s system of internal control. Outsourcing does not relieve management’s monitoring (e.g., complementary user entity controls) responsibilities. When management’s analyses or conclusions are based on the information from a third‑party service provider, the reliability of such information will also need to be assessed by management as part of its system of internal control.

When planning to rely on a CSAE 3416 report (or equivalent) for information generated at the service organization, including assessing the reliability of the information used by management to perform relevant controls or used by us in performing substantive procedures, determine whether the information is within the scope of the service auditor’s report. If the information is not explicitly identified in the application description of the report, or in the description of testing performed by the service auditor, additional procedures are likely to be necessary if we plan to place reliance on controls that use the information and/or reports generated by the service organization. Additional procedures may include obtaining evidence about the reliability of information from management, the service organization, or the service auditor, and understanding how and which reports were subject to testing as part of the service auditor’s engagement. If we determine the relevant information is not included within the reporting or testing scope of the service auditor’s engagement, determine the extent to which further evidence is necessary to assess the reliability of such information.

The determination as to the nature and extent of further evidence necessary is a matter of professional judgment. Also consider the guidance included in OAG Audit 6040.

(D) The information generated by an IT application: (a) is to be used as the basis for a substantive test of details and (b) does not include any attributes that are being relied upon in selecting items for testing (other than where monetary values are used for coverage‑based selection)

When we use information generated by an IT application as a population for our substantive tests of details, the testing objectives over the completeness and accuracy of the information may vary depending on the purpose for which we are using the information.

For example, if we are using the information from a report (e.g., account analysis or transaction listing) to make a non‑statistical sample selection of items to test or to make a targeted testing selection based on high values, we are typically able to test the completeness of the population by:

- Testing the mathematical accuracy of the report, and

- Agreeing the total per the report to the general ledger.

In instances such as these, our substantive testing of the account or transaction information contained in the report can commonly be used/leveraged to provide sufficient audit evidence regarding the accuracy of the underlying information included in the report. In making this determination we consider whether the substantive procedures performed in aggregate (i.e., the completeness procedures described above and the substantive procedures to be performed over accuracy) provide sufficient appropriate audit evidence over the completeness and accuracy of the information.

However, if we are performing targeted testing, our targeted selections may be based on a specific attribute. For example, targeted testing of FOB destination sales in our year‑end cut‑off testing, to determine whether or not shipments were delivered by year‑end. In this example, in addition to the procedures noted in the example above, we would also need to test the accuracy of the specific attributes we are using to target our selections (i.e., FOB destination terms).

Another example is when we intend to use an aged accounts receivable report as the basis for evaluating the adequacy of the allowance for doubtful accounts. We would likely be able to test completeness by agreeing the report total of accounts receivable to the general ledger, but we would need to design a separate test of the accuracy of the aging depicted by the system‑generated aging report. Most likely we would agree a selection of invoices on the report to corroborating documents, recalculate the aging and compare it to the aging reflected by the report. For guidance on testing report attributes that are being relied upon in selecting items for testing see guidance in section (E) below.

In some cases the relevant information contained in the report may already have been evaluated as part of other audit procedures performed. For example, in the scenario above, we may be able to rely on effective controls over the order entry process if they include controls over the accuracy of the transaction attribute FOB destination vs. FOB shipping point. In such circumstances we may determine that we do not need to perform substantive testing of the FOB terms.

Where the system‑generated report used in the audit includes attributes not relevant to the test being performed (e.g., customer number included in an aged accounts receivables report) it is not necessary to test the completeness and accuracy of these attributes.

Refer to the illustration in section “Information generated by an IT application—Testing approach—Completeness and accuracy of the source data presented in the report” summarizing the types of testing procedures that may utilize system‑generated reports and whether we would typically test reliability using the approach described above.

(E) Do we expect our testing of the ITGCs and information processing controls related to the application generating the information to be efficient and effective?

The next steps in assessing the procedures needed to address the reliability of information generated by an IT application will vary depending on whether the entity has designed and implemented ITGCs and information processing controls relevant to the source data and application that generated the information and such controls are operating effectively.

In addition we need to consider whether the information used is coming from the application directly or was modified (e.g., downloaded into a spreadsheet and filtered) and therefore additionally consider guidance in OAG Audit 2051.

No ITGCs and information processing controls reliance—Performing substantive testing procedures over the information generated by an IT application

If we do not plan to test ITGCs and information processing controls, or we test them but find that they are not operating effectively, we substantively test the relevant attributes in each instance of the information that we plan to use for the purposes of the audit. For example, if we are testing a monthly control twice throughout the year, then we substantively test the relevant attributes in the underlying information at least for the same two instances of the control.

Considerations for determining the nature of the substantive tests and increasing the frequency of the testing of the underlying information from the minimum include:

- the source and expected reliability of the underlying information (e.g., is it sourced from applications independent of the financial reporting process; is it straightforward or complex; etc.);

- the importance and planned level of assurance from the IT‑dependent manual control or substantive testing.

For substantive testing, consider whether it would be effective and efficient to design our audit plan to minimize the number of instances of the report to be used (e.g., obtain one report covering the entire period rather than multiple reports for shorter periods) for the purpose of our testing. When information generated by an application is being used in the operation of a control, such an approach is not appropriate because the information generated by an application relied upon in the execution of the control needs to be tested for each instance of the control we are testing.

Performing substantive testing of report attributes

The nature of the substantive procedures to be performed will depend upon the attributes within the information that are relevant to how the information is being used in the audit. A common approach in this area is to apply the concepts from OAG Audit 7043 to perform “full/false” inclusion testing as follows:

- To assess whether the information is accurately reflected on the report, select a sample of items from the report and agree them to the underlying application for specific attributes (such as customer name or invoice number).

- To assess whether the information in the application is completely reflected on the report, select a sample (a different sample than selected for accuracy) of items from the application and agree them to the report for specific attributes (such as customer name or invoice number).

For example, assume management generates a report of all credit memos greater than $1 million on a monthly basis to review for evidence of authorization. We have assessed the design of this control and determined the remaining population of credit memos below this threshold is not material individually or in the aggregate, and therefore, the $1 million threshold is appropriate. We then perform our own independent testing of the report to test the reliability of the report used by management in executing the control. We could elect to perform full/false accept‑reject testing, as follows:

- To assess whether the information is accurately reflected on the report, we select a sample of credit memos on the report and agree them back to the source application for specific attributes, such as customer account numbers and dollar values.

- To assess whether the information in the application is completely reflected on the report, we obtain the list of all credit memos extracted from the application where credit memos are processed, then filter the list of credit memos to those greater than $1 million, and agree a sample of credit memos to the report management uses as part of the monthly control. We also need to perform appropriate testing of completeness of the list of credit memos used in this test (e.g., reconciling totals to the general ledger, sequential numbering verification).
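The two directions of testing in the credit memo example can be sketched as follows. This is a minimal illustration that assumes both the report and the application extract are available as mappings of credit memo number to amount; the function names, the data shapes and the seeded random selection are assumptions made for the sketch, not a prescribed sampling method. Sample sizes would be determined under the applicable sampling guidance.

```python
import random


def select_sample(items, size, seed=0):
    """Pick up to `size` items at random; the seed makes the selection reproducible."""
    items = list(items)
    return random.Random(seed).sample(items, min(size, len(items)))


def full_false_inclusion(report, application, threshold, size, seed=0):
    """Return (accuracy_exceptions, completeness_exceptions) as lists of memo numbers.

    report and application are dicts mapping memo number to amount (hypothetical shape).
    """
    # Accuracy: sample from the report and agree the amount to the application.
    accuracy_exceptions = sorted(
        memo for memo in select_sample(sorted(report), size, seed)
        if application.get(memo) != report[memo]
    )
    # Completeness: filter the application extract to the threshold,
    # sample from it, and confirm each selected memo appears on the report.
    expected = sorted(m for m, amount in application.items() if amount > threshold)
    completeness_exceptions = sorted(
        memo for memo in select_sample(expected, size, seed)
        if memo not in report
    )
    return accuracy_exceptions, completeness_exceptions


application = {"CM1": 1_500_000, "CM2": 2_000_000, "CM3": 500_000, "CM4": 1_200_000}
report = {"CM1": 1_500_000, "CM2": 2_100_000}
print(full_false_inclusion(report, application, threshold=1_000_000, size=10))
```

The completeness of the application extract itself still needs to be tested separately, as noted above.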

Determining the nature, timing, and extent of evidence needed to assess the reliability of the information generated by an application is highly dependent on individual engagement facts and circumstances, as well as the type of report under assessment (i.e., standard, custom, query). The examples below describe how we might test some commonly used reports. Other reports may be used by the entity and utilized by us, and similar types of testing would be considered for those. Note that this list of procedures is not complete and we need to determine whether additional procedures are needed in order to determine the reliability of the entity's reports.

Accounts receivable aging report

- Apply accept‑reject testing on the date and amount of individual transactions to supporting documents such as sales invoices and, if appropriate, shipping documents to verify amounts in the accounts receivable report and to verify accuracy of the categorization of invoices into aging categories.

- Agree the total balance in the report to the amount in the general ledger to check completeness of the report. If there are any differences between the report and general ledger, perform testing on the reconciliation between the report and account ledger.

These procedures allow us to determine whether the aging report is reliable for use in our detailed audit procedures related to the allowance for doubtful accounts. If any additional reports are used by us to audit the calculation of the allowance for doubtful accounts, appropriate testing of that information would also be considered.
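Recalculating the aging category for selected invoices can be sketched as below. The bucket boundaries (0-30, 31-60, 61-90 and over 90 days), the use of invoice date rather than due date, and the field names are all assumptions made for illustration. In practice they would follow the entity's aging policy and the layout of the report being tested.

```python
from datetime import date

# Hypothetical aging buckets: (upper bound in days, label).
BUCKETS = [(30, "0-30"), (60, "31-60"), (90, "61-90")]


def aging_bucket(invoice_date, as_of):
    """Recalculate the aging category from the invoice date and report date."""
    days = (as_of - invoice_date).days
    for upper, label in BUCKETS:
        if days <= upper:
            return label
    return "90+"


def aging_exceptions(sample, as_of):
    """Return invoice numbers whose reported bucket differs from the recalculation."""
    return [
        item["invoice"]
        for item in sample
        if aging_bucket(item["invoice_date"], as_of) != item["reported_bucket"]
    ]


sample = [
    {"invoice": "INV-1", "invoice_date": date(2022, 3, 1), "reported_bucket": "0-30"},
    {"invoice": "INV-2", "invoice_date": date(2022, 1, 15), "reported_bucket": "31-60"},
    {"invoice": "INV-3", "invoice_date": date(2021, 12, 1), "reported_bucket": "90+"},
]
print(aging_exceptions(sample, as_of=date(2022, 3, 31)))
```

The invoice dates used in the recalculation would be those agreed to supporting documents, not the dates shown on the report itself.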

(F) Have we previously evaluated the information generated by an IT application presented in the report?

If identified ITGCs have been effective from the last time we tested the reliability of the report through the period for which we plan to use the information generated by an application, we assessed the validity of the report in a prior period audit, and we are able to verify that the report has not changed since we last tested it, it may be appropriate to conclude that we have sufficient evidence about the reliability of information provided by the report without repeating the specific testing procedures performed in a prior period (see specific considerations in (G) below). We refer to this approach as a benchmarking approach. Normally, if more than three years have passed since our last testing of the report (i.e., “baseline” testing), we would not use a benchmarking approach in the current audit unless the circumstances described in section G1 apply.

(G) Do we intend to apply a benchmarking approach?

A benchmarking approach may be an efficient way to assess the reliability of information generated by an application. However, a benchmarking approach may not be efficient or the most effective strategy for all reports. For example, if we cannot determine the last modified date of a report without extensive analysis and work, it may be more efficient to test the report through other means. If a benchmarking approach is not planned, proceed to section I below.

No direct testing of a report needs to be undertaken in the current year when we are following a benchmarking approach and where we are able to determine that the following elements apply:

- We do not need to re‑establish the baseline (see section G1 for detailed guidance);

- The procedures performed in the original baseline period continue to be sufficient for current year requirements (see section G2 for detailed guidance);

- The ITGCs support the consistent and effective functioning of the information generated by an application, since the baseline was established and through the period for which we plan to use the information (see section G3 for detailed guidance), and

- The report has not changed since it was previously tested (see section G4 for detailed guidance).

We document our consideration of the above factors when a benchmarking approach is planned.
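The four elements above can be captured as a simple checklist, sketched below as an aid to documentation. The class and field names are hypothetical, the three-year default reflects the normal baseline period described in this guidance, and every field records a professional judgment that the sketch does not make for us.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkingAssessment:
    years_since_baseline: int
    extension_supported: bool                   # G1: clear evidence supports extending the period
    baseline_procedures_still_sufficient: bool  # G2
    itgcs_effective_since_baseline: bool        # G3
    report_unchanged_since_baseline: bool       # G4


def can_benchmark(a, max_years=3):
    """Return True only when all four benchmarking elements are met."""
    # G1: the baseline is within the normal period, or an extension is supported.
    baseline_current = a.years_since_baseline <= max_years or a.extension_supported
    return (
        baseline_current
        and a.baseline_procedures_still_sufficient
        and a.itgcs_effective_since_baseline
        and a.report_unchanged_since_baseline
    )


assessment = BenchmarkingAssessment(2, False, True, True, True)
print(can_benchmark(assessment))
```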

The same benchmarking considerations discussed in sections F and G may also apply to other types of IT dependencies.

(G1) Do we need to re‑establish the baseline?

Benchmarking involves testing the report in an initial year to obtain evidence about its reliability and then performing other procedures (i.e., testing of relevant ITGCs and information processing controls, as appropriate) in subsequent years to obtain evidence of continuing reliability of the information generated by an application. Determining when to re‑establish the baseline is a matter of professional judgment. Section G2 summarizes some of the factors we commonly consider in making this judgment.

Normally, we baseline the reports that we intend to use at least every third year. However, where there is clear evidence that no changes have been made to the report and there are no changes in the entity and its environment that may impact the ongoing reliability of the report, the period of time might be extended. In light of the typical changes impacting most entities over time, we apply caution in considering whether to extend the benchmarking period.

(G2) Do the procedures performed in the original baseline period continue to be sufficient for current year requirements?

As management’s environment, controls, and reports change over time, we may determine procedures performed in a prior year to establish a baseline are no longer sufficient. A number of factors could be indicative of such a change, including changes in the information in the report we intend to rely on, changes to controls, changes in technology, indications of errors associated with the report, as well as results from our other procedures. As a result, we may consider re‑testing the report (i.e. establishing a new baseline). For example, in prior years a management report that displayed profitability by entity code was assessed for each of the in‑scope entity codes. However, given the growth in several emerging markets, additional entity codes are now in‑scope for the audit and need to be considered as information relevant to our audit procedures. As a result, the testing procedures performed in a prior year may not continue to be sufficient given the change in underlying objectives (i.e., additional entity codes in scope).

We also consider the procedures performed the last time the report was tested (i.e., when the baseline was established) to evaluate whether the procedures meet the current year objective(s) and all applicable scenarios were tested to demonstrate the report functions as intended. In order to evidence the consideration of the prior year assessment we typically include the results of the prior year testing in the current period’s workpapers, as well as documentation of our current period evaluation of the sufficiency of the procedures.

(G3) Do the ITGCs support the consistent and effective functioning of the information generated by an application, since the baseline was established?

After a report has been tested and determined to be accurate and complete (i.e. establishing a baseline), effective ITGCs support the consistent and effective functioning of the information generated by an application. For example:

- Program change controls may provide evidence that changes to the information or reports are requested, authorized, performed, tested, and implemented, and whether access to programs and data is appropriately restricted.

- Restricted access controls may provide control over which users have the ability to modify reports or data or approve changes to the reports or data.

- Computer operations controls may provide evidence over the transfer of data into reports or reports generated automatically via a scheduled process or event‑driven activity such as a nightly shipment report or real‑time three‑way match exception report.

If information is not sourced from an application over which ITGC evidence has been obtained, consider adding that application to the ITGC testing scope, or perform other substantive validation procedures to assess the completeness and accuracy of the information each time it is used (e.g., performing substantive testing of the underlying information for all information used in the audit).

The nature and extent of other validation procedures may include considerations such as:

- It is possible for the risk of unauthorized program or data changes to be sufficiently low to limit the evidence we need about the effectiveness of some or all ITGCs when we are evaluating the consistent and effective operation of the program or routine that generates the information used in a control. For example, when the information comes from a standard system report or from an “off‑the‑shelf” vendor application with no customization, or in situations where there is no source code modifiable by the entity, there may be limited risk that the report can be changed. Therefore, an evaluation of the effectiveness of program change controls may be less important to our evaluation. In contrast, there could be scenarios, such as “off‑the‑shelf” applications provided by a small vendor whose application is not widely used, where our procedures may be similar to the procedures we perform related to a custom in‑house developed application.

- Other types of evidence might be available that, when considered alone or in combination with some ITGC testing, could provide sufficient evidence about the ongoing consistent and effective operation of the program or routine that generates the information. For example, there may be reliable evidence available to demonstrate the program has not been changed since it was last tested (e.g., an application‑based “last modified date”). An IT audit specialist’s skills and experience can assist when considering such an approach.

(G4) Has the report changed since it was previously tested by us?

Having effective ITGCs alone is not enough to support a benchmarking approach. We need to document the evidence obtained to verify the report has not changed since it was last tested. The nature and extent of the evidence we obtain to verify the report has not changed since our last testing may vary depending on a number of factors, including our evaluation of the strength of the entity's program change controls, the frequency of report changes, and the type of report (standard, custom, or query). Consider whether we have obtained evidence regarding the changes to the report in performing our testing of ITGCs.

For some applications, it is possible to inspect the last change date associated with the report. In these situations, we can identify changes to the report since it was last tested (ensuring that there were no changes to the related query). Changes may impact our planned testing approach based on the nature of the change.

A vendor application patch or upgrade may modify a standard report. Where applicable, we document our understanding of the changes included in a vendor patch or upgrade and its impact (or lack of impact) on standard reports.

For some applications and reports it is not possible to inspect the last change date associated with the application, program, or report. In these situations our procedures may include:

- Obtaining and comparing the source code of the current version of the report to the source code of the report when tested in the baseline period. An IT audit specialist would typically be involved in evaluating whether there have been any changes to the report source code.

- Inquiring of the control owner and/or IT owner and then corroborating the information obtained regarding whether any changes have been made to the report. For example, the control owner may indicate a report has not changed since it was previously tested. As part of corroborating this explanation, the engagement team might review the description of changes using key words such as the report name and identify any change tickets associated with the report. Upon a review of the change ticket, if it is determined the header at the top of the report was updated, but the report itself was not modified, it may be appropriate to accept the control owner’s assertion that no substantive changes were made to the report.
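Where the report source code is available for both the baseline period and the current period, the comparison described in the first procedure above can be partly automated. The following is a minimal sketch only, assuming the two versions can be exported as text; the function names and the sample queries are hypothetical, and an IT audit specialist would still evaluate any differences identified.

```python
import difflib
import hashlib


def fingerprint(source_text: str) -> str:
    """Return a SHA-256 digest of the source, ignoring line-ending differences."""
    normalized = "\n".join(source_text.splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def compare_to_baseline(baseline_source: str, current_source: str) -> list[str]:
    """Return unified-diff lines; an empty list means no differences were found."""
    if fingerprint(baseline_source) == fingerprint(current_source):
        return []
    return list(
        difflib.unified_diff(
            baseline_source.splitlines(),
            current_source.splitlines(),
            fromfile="baseline",
            tofile="current",
            lineterm="",
        )
    )


# Hypothetical report queries, for illustration only.
baseline = "SELECT memo_id, amount FROM credit_memos WHERE amount > 1000000"
current = "SELECT memo_id, amount FROM credit_memos WHERE amount > 500000"

changes = compare_to_baseline(baseline, current)
for line in changes:
    print(line)
```

A matching digest supports, but does not by itself establish, that the report is unchanged; we would also need evidence that the code compared is the code actually used in the application.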

We also need to check (either directly, or as part of our ITGC testing) that the reports we want to rely on do not have manual intervention that could result in alterations being made to the report. We also need to document evidence we obtain by observing the creation of the report, including our observation of the entity determining and setting the parameters used to create the report (e.g., the parameters determining the period that the report covers).

As a reminder, inquiry alone is not sufficient to determine that a report has not changed since it was previously tested.

In addition, even when we follow a benchmarking approach it is expected that we check that the report total matches the total shown in the general ledger (or relevant sub ledger), if applicable.

(H) Testing of mathematical accuracy of the information generated by an IT application

There are multiple factors to consider when testing the mathematical accuracy of reports. These factors vary based on the type of report (i.e., standard, custom, or query), as well as the complexity of the underlying calculations being performed by the report. When assessing mathematical accuracy of reports, we generally consider performing the following:

Substantively testing the mathematical accuracy

On engagements where we do not have a basis to rely on standard reports for mathematical accuracy (e.g., for a complex application where we do not plan to test ITGCs), obtain sufficient appropriate audit evidence of the mathematical accuracy of reports through recalculation.

An efficient way to test the mathematical accuracy of large reports such as detailed accounts receivable aged listings may be to obtain the report electronically and use Excel, Access, or other software to recalculate the sub‑totals and/or grand total of the report.

When requesting an electronic report from the entity, it is important to understand the approximate number of records (e.g., transactions, invoices) contained in the report. By understanding the number of records, the appropriate format (Microsoft Excel, Microsoft Access or other) of data can be requested from the entity. Excel is the easiest method for testing mathematical accuracy; however, Excel has a limitation of 1,048,576 rows. Where the report contains more than this number of rows, an IT audit specialist can assist with obtaining data from the entity and testing the mathematical accuracy of reports using Access or other tools.
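Where a report is too large for a spreadsheet, the same recalculation can be scripted. The following is a minimal sketch, assuming the detail rows are available as a CSV extract; the column names, sample rows, and reported totals are hypothetical.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal

# Hypothetical extract of report detail rows (column names are assumptions).
REPORT_CSV = """customer,invoice,amount
C001,INV-1,1200.50
C001,INV-2,300.25
C002,INV-3,980.00
"""

# Hypothetical sub-totals and grand total as shown on the entity's report.
REPORTED_SUBTOTALS = {"C001": Decimal("1500.75"), "C002": Decimal("980.00")}
REPORTED_GRAND_TOTAL = Decimal("2480.75")


def recalculate(csv_text):
    """Recalculate customer sub-totals and the grand total from detail rows."""
    subtotals = defaultdict(Decimal)  # Decimal() starts each sub-total at zero
    for row in csv.DictReader(io.StringIO(csv_text)):
        subtotals[row["customer"]] += Decimal(row["amount"])
    return dict(subtotals), sum(subtotals.values(), Decimal("0"))


def differences(recalculated, reported):
    """Return {key: (recalculated, reported)} for every sub-total that disagrees."""
    keys = set(recalculated) | set(reported)
    return {
        key: (recalculated.get(key), reported.get(key))
        for key in keys
        if recalculated.get(key) != reported.get(key)
    }


subtotals, grand_total = recalculate(REPORT_CSV)
print(differences(subtotals, REPORTED_SUBTOTALS))
print(grand_total == REPORTED_GRAND_TOTAL)
```

Decimal arithmetic is used rather than floating point so that recalculated amounts can be compared to the report exactly.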

Where it is not feasible to test the mathematical accuracy of the report electronically, we could select page sub‑totals or customer balance sub‑totals and apply accept‑reject testing (see examples of procedures below), following the guidance in OAG Audit 7043. As the objective of the test is to check that the report is accurate, we would not tolerate any exceptions. If no errors are found, we may accept the test and conclude that the report is accurately totaled. Alternatively, if a mathematical error is found, we normally reject the accuracy of the report, request that the entity re‑total the report, and then reperform the test.

In the rare circumstances where an effective and efficient approach to testing mathematical accuracy cannot be developed (e.g., due to size and structure of the report) consider discussing alternative testing strategies with an IT audit specialist.

Other testing techniques over mathematical accuracy:

- When we choose to test programmed procedures for the mathematical accuracy of a report, obtain evidence it is operating as intended in a manner similar to testing any other automated control (e.g., through a combination of testing procedures such as inquiry, reperformance, examination of vendor software manuals, or evaluating the underlying program logic). For further guidance on testing techniques for programmed procedures, refer to the guidance on testing automated controls included in OAG Audit 6054, including use of a benchmarking strategy.

- For widely distributed off‑the‑shelf packages, standard reports may be deemed accurate if we obtain sufficient appropriate audit evidence of the entity’s inability to access or change the applicable vendor source code (e.g., through a combination of specific inquiries with appropriate entity IT personnel, application‑based evidence such as modification date stamps, and/or discussion with an IT audit specialist who has knowledge and experience of the package). Our rationale for reliance on the off‑the‑shelf package and the evidence obtained needs to be documented in our audit file.

(I) Perform procedures to test the reliability of the information generated by an IT application when ITGCs and application controls are determined to be operating effectively

When we expect our testing of ITGCs and information processing controls related to the application generating the information to be efficient and effective, typically it would be sufficient to assess the reliability of the information generated by an IT application only once during the period even if multiple instances of the same information generated by an application are used throughout the audit (for both controls and substantive testing). Testing of the information in those circumstances would include a combination of testing of mathematical accuracy, agreeing totals to the general ledger (if applicable) and one or a combination of the techniques described below. Consider the need to involve an IT audit specialist to determine and execute the most effective and efficient testing approach. Also note that, if the entity inputs parameters each time the report is run, these parameters (e.g., date ranges) need to be assessed each time the report is used.

Test of details of relevant attributes

One of the methods used to validate the reliability of the information generated by an IT application is to perform accept‑reject testing. The detailed approach to this type of test is described in section E above.

Perform an independent code review

When we perform an independent code review, we assess the technical report logic to determine whether the report is generated as intended (e.g., combination or exclusions of entity codes or transaction types). We also perform procedures to obtain evidence that the code subject to review is the same as the code used in the relevant application.

To perform a code review, we need to have sufficient technical ability and knowledge to formulate an independent point of view on the sufficiency of the code to satisfy the intended purpose. This is true both for simple query languages such as SQL, as well as more complex mainframe programming languages, such as COBOL. Even when code is written in a simple query language, the code itself may be complex, affecting the level of knowledge the code reviewer needs to have. We document our assessment of competence in this area including where an IT audit specialist is engaged to perform the review.

When performing a code review, the engagement team would need to consider which elements of the code are important to the information to be used in the audit and develop an independent viewpoint on the appropriateness of the code.

In general, consider performing an annotation (i.e. comments demonstrating an understanding) next to the line of the code to demonstrate our understanding and focus on areas of complexity or the relevant criteria utilized in the report.

For example, as part of substantive testing over payroll, we use management’s report of employees by location including their job title, level, and pay grade when performing disaggregated payroll analytics. The team performs a code review to inspect the underlying SQL used to generate the report. An IT audit specialist with SQL expertise inspects the SQL statements to determine whether the appropriate source data (e.g., human resources and payroll application) is referenced, and whether the code includes statements to generate relevant information (such as location, job title, and pay grade). An IT audit specialist also considers situations where the query excludes selected parameters/information or captures only a selected group of parameters/information. To demonstrate the code review performed by the team, include comments (also known as annotating the code) on the SQL code indicating which statements of code correlate to the data, fields, or calculations being generated in the report. This documentation evidences the results of the code review and the determination that the report is complete and accurate, when included in the workpapers.

Using a data extract, reperform the report/query

Consider whether we have the ability to obtain an extract of the data used in the report. Using our understanding of the report, we can replicate the output by running independent queries on the extracted data or using Excel functionality like pivot tables or sorting and then compare the results to the output on the report.

Continuing with the credit memo report example introduced in section E, we could have elected to assess completeness and accuracy by reperforming the generation of the report. We obtain the full list of all credit memo entries for one month from the application where credit memos are processed. We then filter that month's entries for credit memos greater than $1 million and agree the total number of credit memos, dollar amounts, and other relevant information to the report used by management in their control. We are thereby able to recreate the monthly report used by management and assess the reliability of the information used in the control.
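The reperformance described above might be sketched as follows. This is an illustration only: the record layout, memo identifiers, and amounts are hypothetical, and the sketch assumes each credit memo has a unique identifier.

```python
from decimal import Decimal

THRESHOLD = Decimal("1000000")  # "greater than $1 million", per the example

# Hypothetical extract of all credit memos processed in the month.
extract = [
    {"memo_id": "CM-101", "amount": Decimal("1250000.00")},
    {"memo_id": "CM-102", "amount": Decimal("400000.00")},
    {"memo_id": "CM-103", "amount": Decimal("2100000.00")},
    {"memo_id": "CM-104", "amount": Decimal("1000000.00")},  # not greater than threshold
]

# Hypothetical contents of the report used by management in their control.
management_report = [
    {"memo_id": "CM-101", "amount": Decimal("1250000.00")},
    {"memo_id": "CM-103", "amount": Decimal("2100000.00")},
]


def reperform(extract_rows, threshold):
    """Independently apply the report criterion to the full extract."""
    return [row for row in extract_rows if row["amount"] > threshold]


def compare(expected, reported):
    """Agree memo population, count, and dollar total to the report."""
    exp = {row["memo_id"]: row["amount"] for row in expected}
    rep = {row["memo_id"]: row["amount"] for row in reported}
    return {
        "missing_from_report": sorted(set(exp) - set(rep)),
        "unexpected_on_report": sorted(set(rep) - set(exp)),
        "amount_mismatches": sorted(k for k in set(exp) & set(rep) if exp[k] != rep[k]),
        "count_agrees": len(exp) == len(rep),
        "total_agrees": sum(exp.values(), Decimal("0")) == sum(rep.values(), Decimal("0")),
    }


result = compare(reperform(extract, THRESHOLD), management_report)
print(result)
```

Including a memo exactly at the threshold in the test data helps confirm whether the report applies "greater than" rather than "greater than or equal to".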

This option may be more beneficial for less complex reports. As reports get more complex, the level of effort required to recreate them may increase.

Independently test using an Integrated Testing Facility

Applying the concepts from OAG Audit 6054, we may consider establishing an integrated testing facility on the entity’s application. Testing in an integrated testing facility includes running sample transactions through the application program or routine and comparing the output to expected results.

Using an integrated testing facility can be an effective approach when a number of reports are generated from the same application and data can be used to generate and test multiple reports. For example, with respect to investment activity, investment transactions themselves can be used to test income reports, holdings reports, purchase and sales reports, and other investment schedules.

After designing a series of test transactions that meet certain criteria, the team processes them and then inspects the report(s) for the appropriate inclusion or exclusion of the test transactions they created. Continuing with the credit memo report example from above, the team would process two credit memos, one for greater than $1 million and one for less than $1 million. The team would expect the credit memo greater than $1 million to be included on the report, and the credit memo for less than $1 million to be excluded from the report.
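The evaluation of the test transactions against the report output could be recorded along the following lines. This is a sketch under stated assumptions: the transaction identifiers and the set of identifiers appearing on the report are hypothetical, and processing the transactions through the application itself is outside the sketch.

```python
# Hypothetical test transactions designed by the team, with expected outcomes.
test_transactions = [
    {"memo_id": "TEST-HIGH", "amount": 1_500_000, "expected_on_report": True},
    {"memo_id": "TEST-LOW", "amount": 500_000, "expected_on_report": False},
]


def evaluate_itf_results(transactions, report_ids):
    """Return the IDs of test transactions whose presence on the report
    differs from what the team expected."""
    exceptions = []
    for txn in transactions:
        on_report = txn["memo_id"] in report_ids
        if on_report != txn["expected_on_report"]:
            exceptions.append(txn["memo_id"])
    return exceptions


# Hypothetical memo IDs read from the report after the test transactions were processed.
report_ids = {"CM-101", "TEST-HIGH"}

exceptions = evaluate_itf_results(test_transactions, report_ids)
print(exceptions)
```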

In some instances, the entity may request this testing be performed in a test environment. When performing an assessment in the test environment, consider performing procedures to determine whether the functionality being evaluated in the testing or replicated environment operates in the same manner as in the production environment.

For further clarity, when assessing ITGC deficiencies where we place reliance on IT‑dependent controls and system reports, the engagement team should perform a risk assessment of the ITGC deficiency before assessing the impact on the audit strategy (i.e., testing compensating controls and/or performing additional substantive testing). The risk assessment should consider:

- how the ITGC deficiency could be exploited,

- whether the persons within the entity who have access to exploit the control have incentive to do so, and

- the opportunity for the control to be exploited.

This risk assessment will help provide a basis for assessing the impact on the audit strategy.

OAG Guidance

Testing Security with ITGC Evidence

If access rights have been determined to be relevant during our risk assessment and ITGCs have been tested, sufficient controls typically exist within the Access to Programs and Data domain. Refer to OAG Audit 5035.2 for details.

Alternative Procedures for Testing Security

Where ITGCs for the access to programs and data domain are not tested and where we plan to rely on controls dependent on adequate segregation of duties, alternative procedures can be applied to test whether access rights are appropriate. Such tests can include obtaining an access listing to relevant functions and performing inquiry and inspection procedures to validate access rights do not present an unmitigated segregation of duties risk. As with the alternative approaches in other IT dependent areas, these procedures are considered at various times throughout the period to conclude access is appropriate throughout the audit period. Segregation of duties risk may also be managed through manual controls. We consider both automated and manual controls when assessing the risk and determining our audit response, if any. For the use of alternative procedures for testing security, consult with an IT audit specialist at the planning phase.
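As an illustration of inspecting an access listing for segregation of duties conflicts, the following minimal sketch flags users who hold both sides of a conflicting pair of functions. The user names, function names, and the conflicting pair are hypothetical; in practice the conflicts are defined from our understanding of the process, and any flagged user is then evaluated for mitigating manual or automated controls.

```python
# Hypothetical access listing: user -> functions the user can perform.
access_listing = {
    "asmith": {"create_vendor", "approve_payment"},
    "bjones": {"create_vendor"},
    "clee": {"approve_payment", "post_journal"},
}

# Hypothetical pairs of functions that should not be held by the same user.
conflicting_pairs = [("create_vendor", "approve_payment")]


def find_sod_conflicts(listing, conflicts):
    """Return (user, function_a, function_b) for each user holding both
    functions of a conflicting pair."""
    findings = []
    for user in sorted(listing):
        for function_a, function_b in conflicts:
            if function_a in listing[user] and function_b in listing[user]:
                findings.append((user, function_a, function_b))
    return findings


findings = find_sod_conflicts(access_listing, conflicting_pairs)
print(findings)
```

Because the listing reflects access at a point in time, the same check would be repeated on listings obtained at various times throughout the period.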

OAG Guidance

Testing of Interfaces with ITGC Evidence

Interface controls verify the completeness and accuracy of data transferred between applications, as well as the validity of the data received. Interface controls can be manual or automated, as the data transfer may be manually initiated and the value / record count may be reconciled manually or through application batch totals. The following are some examples of the automated information processing controls that may be used to determine whether data is transferred from the source application and received by the destination application completely and accurately:

- Edit checks used by destination applications to restrict receipt of interface data to certain predefined source applications. This may be achieved through application recognition codes (e.g., IP addresses and database pointers). Edit checks may also ensure the validity of the data received.

- Edit checks built into the destination application to disallow the acceptance of files that are not for the correct date, e.g., the application will not accept yesterday’s file a second time.

- Edit checks built into data transmission to ensure the completeness and accuracy of the transmission. For example, the destination application can calculate control totals and record counts of data received from valid sources. The destination application compares the totals and counts back to the information sent from the source application. The source application compares the control totals against its own calculated information. If the totals are the same, the source application will send a confirmation back to the destination application. If the totals are not the same, the destination application will not process the data.

- Edit checks using file headers/footers. File headers or footers can summarize the information that is included in the files sent from source applications. The information can include the number of records, total amount fields, total debits, total credits, file sizes, etc. The destination application imports the file on a record‑for‑record basis, individually computing edit check calculations. When the calculated information matches the header or footer, the information will go to the next stage of the validation process.
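To illustrate how the date, record count, and control total edit checks described above operate together, the following is a minimal sketch of a destination-side validation. The pipe-delimited file layout (a header with the file date, detail records, and a footer with the record count and control total) is a hypothetical assumption and does not represent any particular application.

```python
from decimal import Decimal


def validate_interface_file(lines, expected_date, loaded_dates):
    """Apply edit checks to a file with the hypothetical layout:
    header 'H|<file_date>', details 'D|<id>|<amount>', footer 'T|<count>|<total>'.
    Returns a list of problems; an empty list means the file passes."""
    records = [line.strip().split("|") for line in lines]
    header, details, footer = records[0], records[1:-1], records[-1]
    problems = []

    file_date = header[1]
    if file_date != expected_date:
        problems.append("unexpected file date")
    if file_date in loaded_dates:
        problems.append("file already loaded")  # e.g., yesterday's file sent twice

    # Recompute the record count and control total record by record.
    record_count = len(details)
    control_total = sum((Decimal(d[2]) for d in details), Decimal("0"))
    if record_count != int(footer[1]):
        problems.append("record count mismatch")
    if control_total != Decimal(footer[2]):
        problems.append("control total mismatch")
    return problems


# Hypothetical file content, for illustration only.
good_file = ["H|2022-09-30", "D|1001|250.00", "D|1002|749.50", "T|2|999.50"]
print(validate_interface_file(good_file, "2022-09-30", loaded_dates=set()))
```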

When the information processing controls around interfaces detect differences, consider the controls in place to resubmit the data as corrected.

Data received by source applications may be subject to validation controls or edit checks. For example, if the entity has established business rules for numbering formats, edit checks could be used to validate the consistency of the data received with the established rules.

If we have concluded that relevant ITGCs addressing risks arising from the use of IT for the interfaces are operating effectively, interface controls may need to be tested less frequently than if ITGCs are not relied upon.

Alternative Procedures for Testing Interfaces

Where ITGCs around computer operations are not tested and data interfaces are relevant to our controls or substantive testing approach, alternative approaches can be applied to determine if data interfaces are effective, i.e., data has been transmitted completely and accurately. An entity’s reconciliation of two applications, if designed appropriately, detects any incomplete or inaccurate data sent via interface. Consider the level of precision in the control (e.g., thresholds used in the reconciliation) and the data being reconciled when using this technique. If reconciliations of data are not performed cumulatively or for a balance sheet account, consider testing of reconciliations throughout the audit period to validate the completeness and accuracy of the data flow.
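When evaluating the precision of an entity's reconciliation between two applications, it can help to see how a threshold affects which differences are identified. The following is a minimal sketch, assuming totals by account are available from both applications; the account codes, amounts, and threshold are hypothetical.

```python
from decimal import Decimal


def reconcile(source_totals, destination_totals, threshold=Decimal("0")):
    """Return {key: difference} for every key whose absolute difference
    between the two applications exceeds the threshold."""
    differences = {}
    for key in sorted(set(source_totals) | set(destination_totals)):
        difference = source_totals.get(key, Decimal("0")) - destination_totals.get(
            key, Decimal("0")
        )
        if abs(difference) > threshold:
            differences[key] = difference
    return differences


# Hypothetical totals by account from the source and destination applications.
source = {"4000": Decimal("15000.00"), "4100": Decimal("820.10"), "4200": Decimal("60.00")}
destination = {"4000": Decimal("15000.00"), "4100": Decimal("820.00")}

print(reconcile(source, destination))                            # every difference
print(reconcile(source, destination, threshold=Decimal("50")))   # only differences over 50
```

With a threshold of 50, the small difference on account 4100 is no longer flagged, which illustrates why the threshold used in the reconciliation matters when assessing whether the control is precise enough for our purposes.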