COPYRIGHT NOTICE — This document is intended for internal use. It cannot be distributed to or reproduced by third parties without prior written permission from the Copyright Coordinator for the Office of the Auditor General of Canada. This includes email, fax, mail and hand delivery, or use of any other method of distribution or reproduction. CPA Canada Handbook sections and excerpts are reproduced herein for your non-commercial use with the permission of The Chartered Professional Accountants of Canada (“CPA Canada”). These may not be modified, copied or distributed in any form as this would infringe CPA Canada’s copyright. Reproduced, with permission, from the CPA Canada Handbook, The Chartered Professional Accountants of Canada, Toronto, Canada.

3073 Direction, Supervision and Review Considerations When Using Technology Solutions

Dec-2023

Overview

This topic explains:

- Direction, supervision and review when using technology solutions

- Additional direction, supervision and review considerations when using a visualization

- Direction, supervision and review considerations when using an approved tool

OAG Guidance

When using technology solutions in the audit, there are two areas of focus to be considered:

- Documentation Standards: the sufficiency of audit documentation included within the working papers to meet CAS 230 requirements; and

- Direction, supervision and review: the documentation needed to assist the reviewer in meeting their supervision and review responsibilities in the normal course of the audit.

Directing the engagement team on the use of technology solutions

When the use of a technology solution is planned, the team manager provides appropriate direction to the engagement team member(s) who will be using the technology solution, including discussing matters such as:

- The suitability of using the technology solution for a particular audit procedure;

- The specific nature of the procedure or activity to be performed by the technology solution and how this will contribute to the audit procedure to which it relates;

- An understanding of the relevant capabilities and functionalities of the technology solution, and determining that the engagement team members possess a sufficient level of proficiency in using it;

- Whether the technology solution is an approved tool;

- The specific responsibilities of the engagement team when using technology solutions (e.g., testing inputs, assessing the reliability of the solution by understanding the coding or logic, and testing the completeness and accuracy of the output); and

- The need to use accompanying guidance and tools (e.g., procedures) to document their use of the technology solution.

Supervision and review when using technology solutions

The reviewer is responsible for reviewing the output from a technology solution in the context of the audit procedures being performed, as well as reviewing any inputs, including checking that the correct source data and parameters (e.g., time period) were used. This also includes reviewing procedures performed over the completeness and accuracy of the source data. The reviewer is also responsible for understanding the objective of the procedures and how that objective is supported by the technology. How a reviewer accomplishes these responsibilities may vary based on how the technology is used in the audit, the complexity of the technology and the underlying evidence available.

For example, when Excel is used to perform a substantive analytic of interest expense, the reviewer may review the formulae in the Excel workbook maintained in the working papers or may independently recalculate a few of the key formulae, either within Excel, in another technology or with a calculator. When an IDEA project or a Robotic Process Automation (RPA) solution is developed to perform the same substantive analytic, the reviewer may open the IDEA Script or project in IDEA, or the automation in the RPA software, and walk through the workflow with the preparer. If the preparer has included text boxes explaining each function, the reviewer may be able to review that documentation to understand the functions performed. The reviewer may also re-perform some of the functions independently, either in IDEA, in Excel or with a calculator.
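To illustrate the kind of independent recalculation described above, the following Python sketch recomputes an interest expense expectation outside the preparer's workbook and compares it with the recorded amount. It assumes a simple expectation model (the average of opening and closing debt balances multiplied by the annual rate, prorated for the number of months); the function names, the threshold and the figures are hypothetical, and an actual analytic would use the expectation model and threshold documented in the working papers.

```python
from decimal import Decimal, ROUND_HALF_UP

def expected_interest(opening_balance: Decimal, closing_balance: Decimal,
                      annual_rate: Decimal, months: int = 12) -> Decimal:
    """Hypothetical expectation: average balance x annual rate, prorated for the period."""
    average_balance = (opening_balance + closing_balance) / 2
    expectation = average_balance * annual_rate * months / 12
    # Round to cents so the result is comparable to recorded amounts
    return expectation.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

def within_threshold(expected: Decimal, recorded: Decimal, threshold: Decimal) -> bool:
    """True if the difference between recorded and expected is within the threshold."""
    return abs(recorded - expected) <= threshold

# Hypothetical figures for illustration only
expected = expected_interest(Decimal("1000000"), Decimal("1200000"), Decimal("0.05"))
print(expected)                                                       # 55000.00
print(within_threshold(expected, Decimal("54200"), Decimal("2500")))  # True
```

Recalculating in a separate tool gives the reviewer evidence that the workbook formulae produce the intended expectation without having to trace every cell.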

The same concepts apply when reviewing a visualization. For example, when reviewing a bar chart in IDEA that shows the dollar value of journal entries posted by each person, with the dollar value on the Y axis and the people on the X axis, the reviewer may open the visualization file and click on the bar for each person. This displays the number of entries that person posted and the exact dollar amount, allowing the reviewer to agree a selection of items back to the source data.

The reviewer is not evaluating whether the technology performed its underlying functionality correctly (i.e., we are not testing whether the coding or logic of "off-the-shelf" technology, such as IDEA, performs core functions such as summation or filtering appropriately). Rather, the reviewer considers how the engagement team member applied the functionality, whether it was appropriate to achieve the intended objective, and whether it was applied to the appropriate population. For example, if a team creates a pivot table in Excel to summarize the different revenue streams subject to testing, the reviewer would likely check whether: (1) the correct filters were applied in the pivot, (2) the appropriate function was used (i.e., the pivot summed or averaged the appropriate amounts) and (3) the entire population was included in the pivot. The same considerations would be relevant when a similar function is performed in IDEA or another technology.
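The three pivot table checks described above can also be re-performed outside the preparer's tool. The Python sketch below is a minimal illustration, not a prescribed OAG procedure. It assumes the source data is available as a list of records with hypothetical `stream` and `amount` fields, and that a control total for the population (e.g., from the general ledger) is available to test completeness.

```python
from collections import defaultdict
from decimal import Decimal

def summarize(rows, streams_in_scope):
    """Re-perform the pivot: sum amounts by revenue stream for the streams in scope."""
    totals = defaultdict(Decimal)  # Decimal() starts each stream at 0
    for row in rows:
        if row["stream"] in streams_in_scope:
            totals[row["stream"]] += row["amount"]
    return dict(totals)

def review_pivot(rows, streams_in_scope, preparer_pivot, control_total):
    """Return the outcome of each reviewer check on the preparer's pivot."""
    reperformed = summarize(rows, streams_in_scope)
    return {
        # (1) The pivot shows exactly the revenue streams intended to be in scope
        "filters_ok": set(preparer_pivot) == set(streams_in_scope),
        # (2) Each amount is the sum (not, e.g., the average) of the underlying rows
        "function_ok": preparer_pivot == reperformed,
        # (3) The rows used reconcile to the population control total
        "population_ok": sum((r["amount"] for r in rows), Decimal("0")) == control_total,
    }

# Hypothetical source data
rows = [
    {"stream": "A", "amount": Decimal("100")},
    {"stream": "A", "amount": Decimal("50")},
    {"stream": "B", "amount": Decimal("30")},
]
# A pivot that averaged stream A instead of summing it fails check (2)
print(review_pivot(rows, {"A", "B"}, {"A": Decimal("75"), "B": Decimal("30")}, Decimal("180")))
```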

When reviewing a more complex technology solution, the reviewer may rely on a review of the supporting documents created during the development process, such as a design document, a test plan and test results, to gain evidence over the proper operation and application of the technology. For example, assume an engagement team performs access control testing 15 different times within one engagement. The engagement team decides to develop a bot using RPA technology to test whether access for terminated employees was removed in a timely manner. The Data Analytics and Research Methods team defines the objectives of the test, programs the bot and tests the bot to validate that it works as intended. The Data Analytics and Research Methods team would then run the bot for each population (in this instance, 15 times). In this scenario, the strategy, design, testing and test results documentation for the bot would be retained centrally in the audit file and referenced in the working papers where the bot was run. In reviewing the testing performed, the engagement team reviewer would understand the objectives and functions of the RPA bot and the testing performed to validate that the bot was functioning as intended.
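The core logic of a timeliness test like the one in this example can be sketched in a few lines of Python. This is a hypothetical illustration, not the Office's bot: the record fields, the five-day definition of "timely" and the population names are assumptions made for the example. In practice, the criteria would come from the bot's design document.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional

@dataclass
class TerminatedUser:
    user_id: str
    termination_date: date
    access_removed: Optional[date]  # None means access has not been removed

def untimely_removals(users: List[TerminatedUser], max_days: int) -> List[str]:
    """Return IDs of users whose access was not removed within max_days of termination."""
    flagged = []
    for user in users:
        if user.access_removed is None:
            flagged.append(user.user_id)
        elif (user.access_removed - user.termination_date).days > max_days:
            flagged.append(user.user_id)
    return flagged

def run_for_all(populations: Dict[str, List[TerminatedUser]],
                max_days: int) -> Dict[str, List[str]]:
    """Apply the same test logic to each population, one run per population."""
    return {name: untimely_removals(users, max_days) for name, users in populations.items()}

# Hypothetical population; "timely" assumed to mean within 5 days
population = [
    TerminatedUser("u1", date(2023, 3, 1), date(2023, 3, 2)),
    TerminatedUser("u2", date(2023, 3, 1), date(2023, 3, 20)),
    TerminatedUser("u3", date(2023, 3, 1), None),
]
print(run_for_all({"population_1": population}, max_days=5))
```

Because the same logic is applied to every population, the design and testing evidence for it can be documented once and referenced from each working paper where it was run.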

When supervising and reviewing the use of a technology solution by a specialist in accounting or auditing, also refer to OAG Audit 3101.

Additional supervision and review considerations when using a visualization

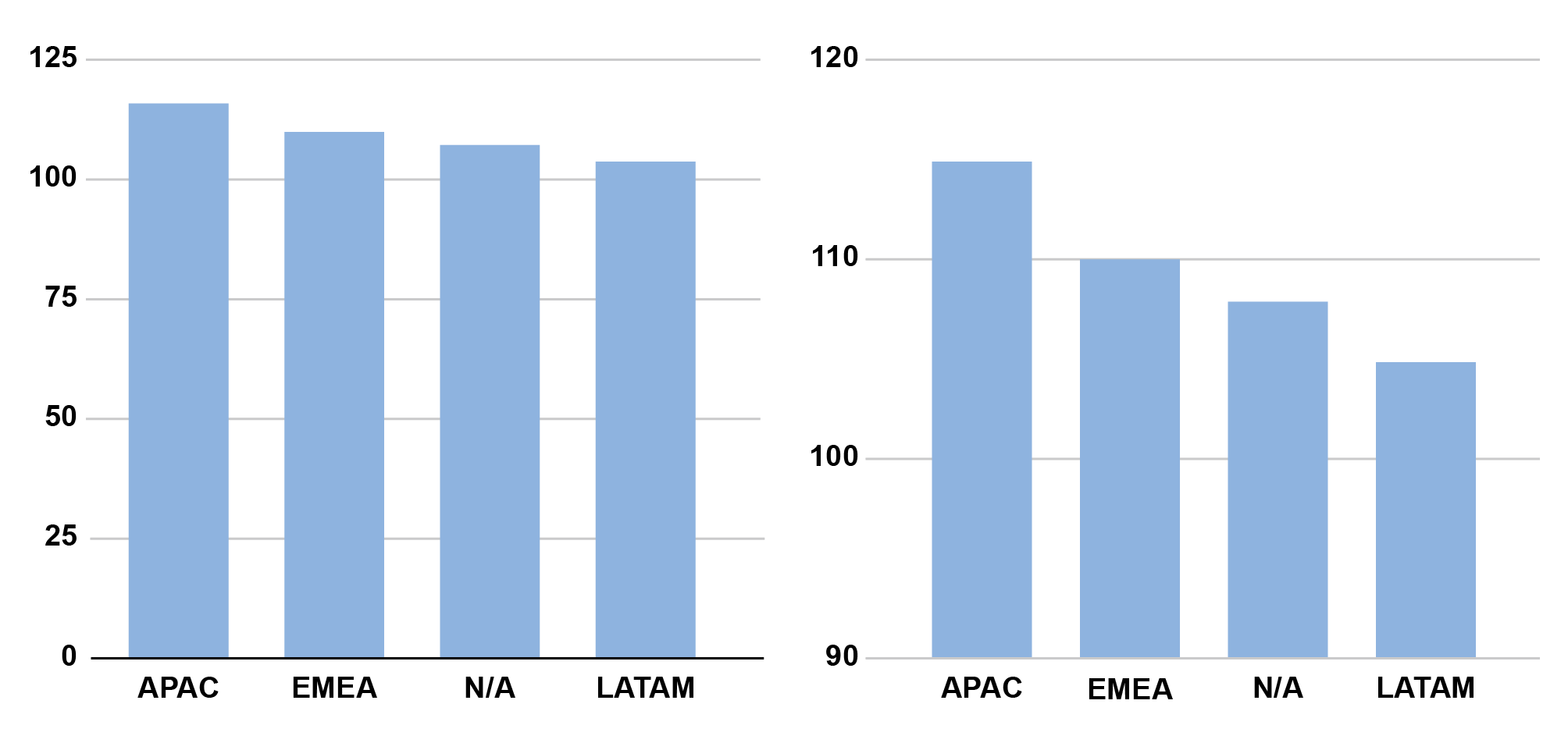

Design visualizations in a way that effectively communicates the intended information and message. For example, a user’s interpretation of a graphic can be affected by the scaling of the vertical or horizontal axis. The relative lengths of bars in a bar chart may be used to indicate the significance of differences in variables. However, the perception of significance can be affected by the scale of the axes and other aspects of the graphic. If a graphic is improperly designed, it might result in us failing to identify an important matter that requires additional focus. It might also lead us to identify a matter for follow-up when no further work is warranted. As an example, the two graphics shown above give a significantly different impression of the variation in sales between regions, even though both were created using the same data.
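The effect of axis scaling can be quantified. In a bar chart, the drawn length of each bar is proportional to its value minus the value at which the axis starts, so starting the axis above zero exaggerates differences. The Python sketch below uses hypothetical regional sales figures (not the data behind the graphics in this section) to show how the apparent ratio between two bars changes with the axis starting point.

```python
def apparent_ratio(value_a: float, value_b: float, axis_min: float = 0.0) -> float:
    """Ratio of the longer to the shorter drawn bar when the value axis starts at axis_min."""
    low, high = sorted((value_a, value_b))
    if low <= axis_min:
        raise ValueError("axis_min must be below both values for both bars to be visible")
    return (high - axis_min) / (low - axis_min)

# Hypothetical sales for two regions: a 5% difference
east, west = 1_000_000, 1_050_000
print(apparent_ratio(east, west))                    # 1.05: axis starts at zero
print(apparent_ratio(east, west, axis_min=950_000))  # 2.0: west's bar looks twice as long
```

A reviewer who checks where each axis starts can tell whether a visually large difference reflects a large difference in the underlying data.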

The reviewer of a visualization considers whether the graphic has been designed in a way that makes it difficult to understand, presents an incomplete or inaccurate picture of the audit risk being addressed, or inaccurately portrays a topic by using the wrong criteria (e.g., inappropriate data used to define the x-axis and/or y-axis).

The filters that are applied when creating a visualization in a software tool such as IDEA can also change how a user is likely to interpret the data depicted in the visualization. Therefore, a reviewer also needs to understand and assess the appropriateness of how filters are being used in the visualization.
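One way to make the effect of a filter visible to a reviewer is to report what it excludes. The Python sketch below assumes journal entries are available as records with hypothetical `posted_by` and `amount` fields and that the visualization's filter can be expressed as a predicate; the $10,000 cut-off in the example is illustrative only and is not an OAG threshold.

```python
from decimal import Decimal
from typing import Callable, Dict, List

def filter_impact(entries: List[dict], keep: Callable[[dict], bool]) -> Dict[str, object]:
    """Report how many entries, and what dollar value, a visualization filter removes."""
    kept = [e for e in entries if keep(e)]
    removed = [e for e in entries if not keep(e)]
    return {
        "kept_count": len(kept),
        "removed_count": len(removed),
        "removed_value": sum((e["amount"] for e in removed), Decimal("0")),
    }

# Hypothetical journal entries
entries = [
    {"posted_by": "A", "amount": Decimal("500.00")},
    {"posted_by": "A", "amount": Decimal("12000.00")},
    {"posted_by": "B", "amount": Decimal("9999.99")},
    {"posted_by": "C", "amount": Decimal("25000.00")},
]
# Hypothetical filter: show only entries of $10,000 or more
print(filter_impact(entries, lambda e: e["amount"] >= Decimal("10000")))
```

Knowing the count and value of excluded entries helps the reviewer judge whether a filter narrows the visualization appropriately or hides part of the population relevant to the audit risk.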

Supervision and review considerations when using an approved tool

In cases when the engagement team is using a technology solution approved for use by the Office, the reviewer is still responsible for understanding the scope and objectives of the use of the tool on the engagement, assessing the suitability of the tool for the audit procedure in which it is being used, reviewing the output in the context of the audit procedure being performed, and reviewing the appropriateness and reliability of any inputs into the tool. However, in accordance with OAG Audit 3072 concerning responsibilities when an engagement team uses an approved software tool, the engagement team is not responsible for testing or documenting the reliability of the tool itself (including its coding and logic) or the completeness and accuracy of the tool’s output. The engagement team is, however, responsible for performing audit procedures on the output, including taking any follow-up actions that may be necessary as a result of performing those procedures.

For example, when Data Analytics and Research Methods specialists use an approved tool to evaluate configurable settings, automated controls, user access and the associated segregation of duties conflicts for a specific ERP application on an assurance engagement, it is the engagement team’s responsibility to understand and document the scope and purpose of the work performed. This includes which ERP environments and company codes are subject to the tool, which audit procedures the tool is supporting and whether the tool is suitable for those procedures. The engagement team is also responsible for performing audit procedures on the outputs, such as determining whether any users had been assigned a superuser attribute. However, the reliability of the tool’s functions and the completeness and accuracy of its outputs will have been validated as part of the Office’s approval process and would not be the responsibility of the engagement team.