Performance Audit Manual

COPYRIGHT NOTICE — This document is intended for internal use. It cannot be distributed to or reproduced by third parties without prior written permission from the Copyright Coordinator for the Office of the Auditor General of Canada. This includes email, fax, mail and hand delivery, or use of any other method of distribution or reproduction. CPA Canada Handbook sections and excerpts are reproduced herein for your non-commercial use with the permission of The Chartered Professional Accountants of Canada (“CPA Canada”). These may not be modified, copied or distributed in any form as this would infringe CPA Canada’s copyright. Reproduced, with permission, from the CPA Canada Handbook, The Chartered Professional Accountants of Canada, Toronto, Canada.

6020 Assessing the Reliability of Data

May-2024

Overview

To obtain sufficient and appropriate audit evidence, engagement teams often use data to perform audit procedures and to support audit findings and conclusions. When using data as inputs to audit procedures or to support audit findings and conclusions, engagement teams must obtain sufficient and appropriate evidence that the data is reliable, meaning that it is accurate and complete. This manual section focuses on assessing the reliability of data.

OAG Policy

When using data as input to perform audit procedures or to support audit findings and conclusions, the engagement teams shall evaluate whether the data is reliable for its intended purpose, including obtaining audit evidence about its completeness and accuracy. [May-2024]

OAG Guidance

What CSAE 3001 says about data

CSAE 3001 requires the consideration of the relevance and reliability of the information to be used as evidence and requires that sufficient and appropriate evidence be obtained in forming the audit conclusion. OAG Audit 1051 Sufficient appropriate audit evidence provides guidance on sufficiency and appropriateness of audit evidence.

Why it is important

The nature and complexity of information systems in which data is recorded, stored, transformed, and extracted do not guarantee data reliability because errors can still be introduced by people or processes. The practitioner must evaluate whether the data is sufficiently reliable for their purposes. Unreliable data may lead to unreliable findings and conclusions.

Definitions

What data is

Data is a digital representation of information. Data is a collection of discrete or continuous values describing quality, quantity, facts, statistics, or other parameters of information. Data can exist in multiple forms, including source data and system-generated outputs.

Source data

Source data, for purposes of this guidance, is data that the practitioner has obtained in the form in which it is stored rather than the form in which it may be presented, used, or reported by end users or user interfaces. Source data includes raw data. A primary example is one or more data tables from databases. Source data is typically taken from the data’s primary storage location, whether it be a dataset, database, data warehouse, data mart, spreadsheet, or any other data repository. Source data may be obtained from data held by the audited entity or obtained from third parties.

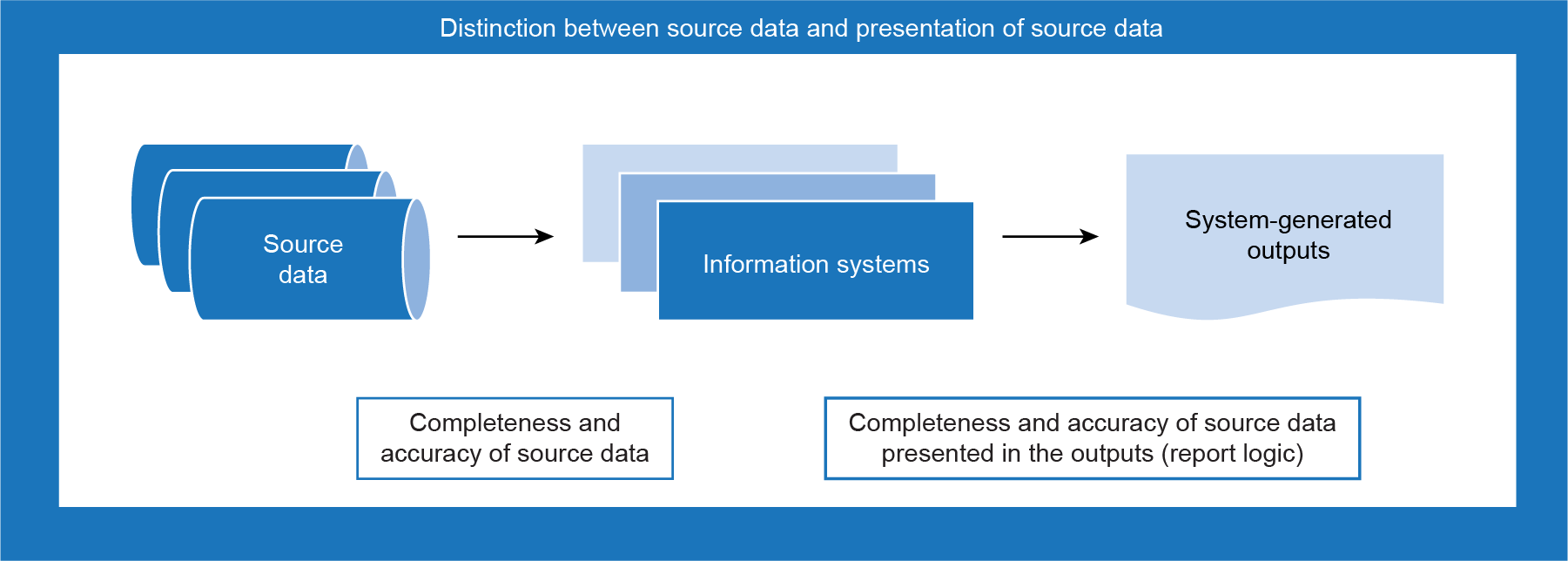

System-generated outputs

System-generated outputs, for purposes of this guidance, are data that information systems have produced by compiling, aggregating, summarizing, filtering, or otherwise transforming source data (Exhibit 1). Examples include

- standard outputs from off-the-shelf packages (outputs from software purchased from a third-party vendor)

- outputs routinely generated by the entity’s programmed or configured systems

- outputs generated through end-user computing tools, such as Microsoft Excel, Microsoft Access, or similar products

- outputs generated by third-party information systems

System-generated outputs can be in the form of a report, a spreadsheet (such as an Excel file), or a text file (such as a comma-separated values file).

Exhibit 1—Relationship between source data and system-generated outputs

How data can be used in a direct engagement

Data can be used in multiple ways, including, but not limited to, the following:

- in risk assessment procedures

- to identify the population of items from which a sample will be selected for further testing

- as an input to perform analytical procedures

- as contextual information in the assurance report

- as evidence to support audit findings and conclusions in the assurance report

Collecting information

To assess the risk of data not being reliable, engagement teams should have a good understanding of how the data is collected, the systems it is extracted from, and the relevant controls over data.

This will help engagement teams to understand the data, its nature, and the internal controls over data that are key inputs in evaluating the risk of data being unreliable and determining the best assessment strategy.

There are many ways of assessing reliability.

The following are examples of questions that engagement teams could ask to assess data and to understand the processes to generate it. The responses to these questions can help to determine risk areas and therefore anticipate issues regarding the reliability of data.

Examples of questions to consider

- How is your data maintained (database systems, Microsoft Excel or Word files, paper)?

- Is your data generated using an automated process, or is it entered manually?

- At what level (transaction, individual, or program) is the information collected, or has it been aggregated?

- How would you obtain assurance on the accuracy and completeness of your data?

- Have your data sources been otherwise assessed for measures of data quality?

- How would you prevent the improper access to your data?

- Do you have controls on the entry, modification, and deletion of your computerized data?

- For what purposes is your data used (for example, tracking, reporting)?

- Is there an oversight function for the data sources in your organization that ensures that proper data management practices are maintained?

- What impact or role do your data sources have with regard to influencing legislation, policy, or programs?

- Do your data sources capture information that is likely to be considered sensitive or controversial?

- What is your data’s classification level (unclassified, Protected A, and so on)?

- With regard to your data, what documentation can you provide and how is it maintained (data dictionary, details on data controls, and so on)?

There are many reports or documents that engagement teams may request and review to help in acquiring a good understanding of data.

Examples of documents to consider

- documentation on relevant information systems and processes

- documentation on data quality procedures

- relevant user manuals

- relevant internal audit reports

Understanding data relevance and reliability

The relevance of data refers to the extent to which evidence has a logical relationship with, and importance to, the issue being addressed.

The reliability of data means that data is complete and accurate as follows:

- Completeness of the data refers to the extent to which all transactions that occurred are input into the system, are accepted for processing, are processed once and only once by the system, and are properly included in the outputs.

- Accuracy of the data refers to the extent to which recorded data reflects the actual underlying information (for example, amounts, dates, or other facts are consistent with the original inputs entered at the source). There are other components of accuracy to be considered when the data is a system-generated output (for example, the accuracy of a financial report may include the accuracy of the calculations, that is, mathematical accuracy).

Accuracy and completeness need to be assessed only if engagement teams have deemed the data to be relevant. Once data is deemed relevant, accuracy and completeness are the main attributes to consider for assessment.

Data reliability versus data integrity

Data reliability and data integrity are 2 different concepts. Data integrity refers to the accuracy with which data has been extracted from the information system (source), transferred to engagement teams, and transformed into an appropriate format for engagement teams to use. This distinction is important, as data can have integrity but not reliability (for example, when insufficient evidence has been obtained over the accuracy and completeness of the information). Therefore, obtaining assurance only over integrity is not sufficient for engagement teams to conclude that data is sufficiently reliable.

Factors affecting the reliability of data

The reliability of data is mainly influenced by the following factors (Exhibit 2):

- Source of data: Different data sources will require different approaches to evaluating reliability. Data can be from an internal or external source, and data can be integrated from a variety of sources.

- Nature of the data: The nature of data, such as complex data, sensitive data, or classified data, will require different approaches to evaluating reliability.

- Internal controls over data: These controls ensure that data contains all of the data elements and records needed for the engagement and reflects the data entered at the source or, if available, in the source documents.

Exhibit 2—Descriptions and examples of factors affecting the reliability of data

| Factors | Description | Examples |
|---|---|---|
| Source of data: external source | While recognizing that exceptions may exist, data from credible third parties is usually more reliable than data generated within the audited organization. External information sources may include academics, researchers, and parliamentary committees. Circumstances may exist that could affect the reliability of data from an external source. For example, information obtained from an independent external source may not be reliable if the source is not knowledgeable or if biases exist. | Examples of data that may be obtained from external data sources include |
| Source of data: internal source | Internal data refers to the private data collected within the organization. For example, engagement teams may intend to make use of the entity’s performance reports generated from its information system. Internal reports may be produced from different types of systems, and the information can be obtained from a variety of sources. | Examples of data that may be obtained from internal data sources include |
| Nature of the data | The nature of data is also an important factor to consider. Data could be simple, highly complex, sensitive, or classified as secret. | When data is extremely complex, engagement teams may require the use of an expert to evaluate the reliability. |
| Internal controls over data | The reliability of data is influenced by the controls that ensure that data contains all of the data elements and records needed for the engagement and reflects the data entered at the source or, if available, in the source documents. Controls over data can be manual, automated, or IT-dependent manual controls. | For example, an IT-dependent manual control may exist where data entered into the system requires approval by an authorized individual before being processed. |

The factors affecting the reliability of data will be considered by engagement teams to assess the risk that data is not reliable.

Determining the extent of the reliability assessment

Engagement teams should consider the elements below when determining the extent of the assessment:

- the purpose for which the data is used

- the significance of data as evidence

- the risks that data is not reliable

There is no specific formula or recipe to precisely determine the extent of work to assess the reliability of data. Engagement teams need to consider and document the factors listed above and conclude on the basis of their professional judgment.

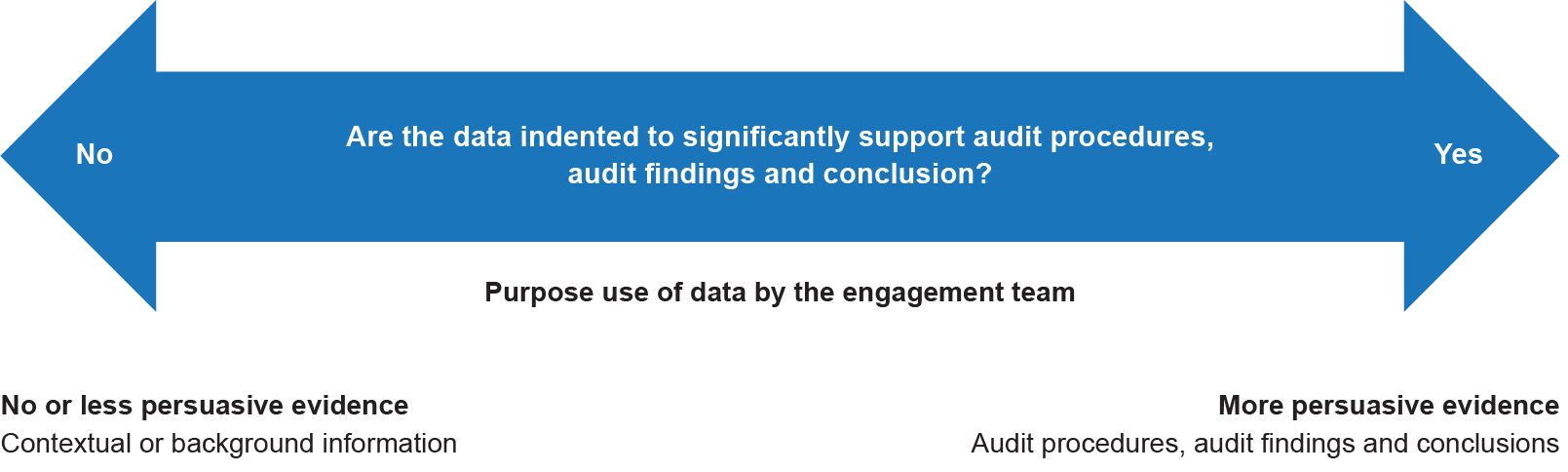

The purpose for which data is used

The purpose for which the data is to be used will affect the level of effort and the nature of audit evidence required (Exhibit 3).

Exhibit 3—Purpose for using data in direct engagements

Engagement teams need to devote considerably more effort when assessing the reliability of data to be used as inputs to audit procedures or to support audit findings and conclusions compared with data to be used as contextual or background information.

Audit procedures, audit findings, and conclusions

In accordance with OAG policy, when using data as an input to perform audit procedures or to support audit findings and conclusions, engagement teams shall evaluate whether the data is reliable for its intended purpose, including obtaining audit evidence about its completeness and accuracy. A reliability assessment is required when data is used for those 3 specific purposes.

Contextual or background information

Background information generally sets the tone for reporting the engagement results or provides information that puts the results in proper context. Data used in contextual or background information in the audit report is typically not evidence supporting the assurance conclusion.

Level of risk of using non-reliable data

Using non-reliable data would likely weaken the analysis and lead engagement teams to wrong audit findings or conclusions. The level of risk of data not being reliable should affect the nature and extent of testing required. Engagement teams should consider the factors affecting the data reliability to assess the risk of data not being reliable. As mentioned previously, these factors are the source of data, the nature of the data, and internal controls over data (Exhibit 4).

Exhibit 4—Factors affecting the reliability of data

| Factor | Generally more reliable (lower reliability risk) | Generally less reliable (higher reliability risk) |
|---|---|---|
| Source of data | Independent third party | Entity |
| Nature of the data | Simple data | Highly complex data |
| Internal controls over data | Existing related internal controls | Ineffective or non-existent related internal controls |

Procedures to assess the reliability of data

Engagement teams may use a variety of procedures to assess the reliability of data. The desired level of evidence can be obtained through tests of controls, substantive procedures, or a mix of both. On the basis of the level of assessed risk, engagement teams need to determine the most efficient approach to obtain the desired level of evidence.

Control testing

Control testing can be an excellent source of evidence. Engagement teams need to obtain an understanding of the internal controls, including the controls and processes of the information systems where data is maintained. Engagement teams will need to identify which controls are the most relevant for data reliability and assess whether the relevant controls have been designed and implemented appropriately. It is only after concluding on the design and implementation of the relevant controls that engagement teams can consider testing the effectiveness of those controls.

Manual and automated controls can both provide assurance over the reliability of data. However, since data is usually created, processed, stored, and maintained using information systems, engagement teams may have to consider testing the automated controls. Testing the operating effectiveness of the IT general controls (ITGCs) and application controls over the information systems in which the data is stored and maintained may be an effective and efficient approach to obtaining audit evidence over the completeness and accuracy of the data (Exhibit 5).

Exhibit 5—Controls that can provide assurance over the reliability of data

| Control type | Description |
|---|---|
| Automated controls | Controls performed by IT applications or enforced by application security parameters. |
| IT general controls (ITGCs) | Policies and procedures that apply to all the entity’s IT processes that support the continued proper operation of the IT environment, including the continued effective functioning of information processing controls and the integrity of information (that is, the completeness, accuracy, and validity of information) in the entity’s information system. |
| Application controls | Application controls pertain to the scope of individual business processes or application systems and include controls within an application around input, processing, and output. The objective is to ensure that |

An example of an application control is an input control. Consider a scenario in which companies are required to submit laboratory results electronically on a weekly basis, showing the level of harmful substances released into the air and waterways. Input controls can restrict the fields that can be edited or uploaded electronically into the database. In this case, the system would generate the date when the company uploaded its laboratory results, and companies could not edit the date field. The engagement team could test the restrictions on electronic submissions to validate the input controls, which support data integrity. Further testing would be required to assess the reliability of the data, as described below.
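
The input control in this scenario can be illustrated with a minimal Python sketch. It is an illustration only, not a description of any entity's system: the names `EDITABLE_FIELDS`, `create_submission`, and `apply_edit` are hypothetical, and a real application would typically enforce such restrictions in the database or application layer.

```python
from datetime import datetime, timezone

# Hypothetical: the only fields a company may supply or edit.
EDITABLE_FIELDS = {"company_id", "substance", "level"}


def create_submission(payload, now=None):
    """Accept an upload, rejecting any field outside the permitted set."""
    unexpected = set(payload) - EDITABLE_FIELDS
    if unexpected:
        raise ValueError(f"Fields not accepted on upload: {sorted(unexpected)}")
    record = dict(payload)
    # The system, not the company, generates the upload date.
    record["uploaded_at"] = now or datetime.now(timezone.utc)
    return record


def apply_edit(record, field, value):
    """Return an edited copy, rejecting edits to restricted fields."""
    if field not in EDITABLE_FIELDS:
        raise PermissionError(f"Field '{field}' cannot be edited")
    updated = dict(record)
    updated[field] = value
    return updated
```

An engagement team testing a control of this kind would attempt the restricted action (here, editing `uploaded_at`) and confirm that the system rejects it.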

Understanding the nature and source of the data will be important in determining whether ITGCs or application controls are relevant in aiding the auditor in gathering audit evidence over the data. Engagement teams may consult the IT audit specialist for the assessment of relevant ITGCs and application controls (also called information processing controls).

The Annual Audit Manual also includes useful information in section OAG Audit 5035.2 Identify the risks arising from the use of IT and the related ITGCs, and in section OAG Audit 5035.4 Information processing objectives. While not directly applicable to direct engagements, engagement teams can consult those sections to deepen their knowledge and understanding of the relevance of ITGCs or application controls in the engagement.

Spreadsheet—Control considerations

Spreadsheets can be easily changed and may lack certain control activities, which results in an increased inherent risk of errors, such as the following:

- input errors (errors that arise from flawed data entry, inaccurate referencing, or other simple cut and paste functions)

- logic errors (errors in which inappropriate formulas are created and generate improper results)

- interface errors (errors that arise from importing data from or exporting data to other systems)

- other errors (errors that include inappropriate definitions of cell ranges, inappropriately referenced cells, or improperly linked spreadsheets)

Spreadsheet controls may include one or more of the following:

- ITGC-like controls over the spreadsheet

- controls embedded within the spreadsheet (similar to an automated application control)

- manual controls around the data input and output of the spreadsheet

It is likely that the entity will not have implemented many, or any, ITGC-like controls over its spreadsheets that can be tested. In some cases, entities implement manual controls over their spreadsheets. As a result, engagement teams should focus on those manual controls over the data input into the spreadsheet and the output calculated by the spreadsheet.

Substantive testing

Engagement teams may use various substantive tests, as they can be an efficient and effective way to test the reliability of system-generated outputs. Substantive tests may include procedures such as tracing the output items to source documents or reconciling the outputs to independent, reliable sources.

Obtaining audit evidence over the completeness and accuracy of data can also involve tracing items (such as individual facts, transactions, or events) to and from source documents. This will help engagement teams to determine whether the data accurately and completely reflects these documents. Testing for completeness involves tracing from source documents to the systems or applications in which data is stored, and testing for accuracy involves tracing from the systems or applications back to source documents.
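
The two directions of tracing can be sketched in Python as follows. This is a simplified illustration under stated assumptions: source documents and system records are assumed to share an identifier, the function name `trace_both_directions` and the sample invoice data are hypothetical, and in practice tracing is usually performed on a selected sample rather than on every record.

```python
def trace_both_directions(source_documents, system_records):
    """Compare source documents with system records keyed by a shared ID.

    Both arguments are dicts mapping an ID to the recorded value.
    """
    source_ids = set(source_documents)
    system_ids = set(system_records)
    # Completeness: trace from source documents to the system.
    missing_from_system = sorted(source_ids - system_ids)
    # Accuracy: trace from the system back to source documents.
    unsupported_in_system = sorted(system_ids - source_ids)
    value_mismatches = sorted(
        key
        for key in source_ids & system_ids
        if system_records[key] != source_documents[key]
    )
    return {
        "missing_from_system": missing_from_system,
        "unsupported_in_system": unsupported_in_system,
        "value_mismatches": value_mismatches,
    }


# Hypothetical sample data for illustration.
source_documents = {"INV-001": 250.00, "INV-002": 75.50, "INV-003": 120.00}
system_records = {"INV-001": 250.00, "INV-002": 57.50, "INV-004": 300.00}

result = trace_both_directions(source_documents, system_records)
print(result)
```

In this sample, `INV-003` points to a completeness issue, while `INV-004` and the differing amount for `INV-002` point to accuracy issues that would need follow-up.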

On the basis of the level of assurance sought, engagement teams may use substantive analytical procedures, accept-reject testing, or purposeful testing to select a sample of data records to be traced to and from source documents (Exhibit 6).

Exhibit 6—Substantive tests to test the reliability of outputs

| Type of substantive test | Description |
|---|---|
| Substantive analytical procedures | Substantive analytics evaluates information through the analysis of plausible relationships among both financial and non-financial data. Analytical procedures also encompass a necessary investigation of identified fluctuations or relationships that are inconsistent with other relevant information or that differ significantly from an engagement team’s expectation. When using analytical procedures to test the reliability of data, engagement teams should consider using information that has already been audited or using third-party information. When engagement teams have no choice but to use unaudited data to build their expectations or to analyze a plausible relationship, the reliability of that data must be assessed before placing reliance on it. The Annual Audit Manual includes useful information in section OAG Audit 7030 Substantive analytics. Engagement teams may consult this section to get more detailed information on substantive analytical procedures that might be relevant for their analyses. |
| Accept-reject testing | The objective of accept-reject testing, also referred to as attribute testing, is to gather sufficient evidence to either accept or reject a characteristic of interest. It does not involve the projection of a monetary misstatement in an account or population; therefore, accept-reject testing is used only when engagement teams are interested in a particular attribute or characteristic and not a monetary balance. When testing the underlying data of a report, engagement teams can apply accept-reject testing specifically to test the accuracy and completeness of the data included in the report. If more than the tolerable number of exceptions are identified, the test is rejected as not providing the desired evidence. The Annual Audit Manual also includes useful information in section OAG Audit 7043 Accept-reject testing, and in section OAG Audit 7043.1 A five step approach to performing accept-reject testing. While not directly applicable to direct engagements, engagement teams can consult those sections to deepen their knowledge and understanding of accept-reject testing and the 5-step approach to performing this type of test in their engagements. The accept-reject testing covers the accuracy and completeness of the data as follows: |
| Purposeful testing | Purposeful testing involves selecting items to be tested on the basis of a particular characteristic. This is a preferred approach used by engagement teams, as it provides the opportunity to exercise judgment over which items to test. Purposeful testing can be applied to either a specific part of the data or the whole data. The results from purposeful testing are not projected to the untested items in a population. For more detailed information on purposeful selection, engagement teams can consult OAG Audit 6040 Selection of Items for Review. |
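
The accept-reject decision rule described in Exhibit 6 can be sketched as follows. This is a minimal illustration, not the method set out in OAG Audit 7043: the function name `accept_reject`, the sample size, and the tolerable number of exceptions are all hypothetical, and the actual sample size and tolerable exceptions should be determined using the applicable guidance and professional judgment.

```python
def accept_reject(sample_results, tolerable_exceptions):
    """Evaluate an accept-reject test.

    sample_results: list of booleans, True when the tested item
        shows the attribute of interest (no exception).
    tolerable_exceptions: maximum number of exceptions that still
        allows the test to be accepted.
    """
    exceptions = sum(1 for passed in sample_results if not passed)
    # The test is rejected only when exceptions exceed the tolerable number.
    decision = "accept" if exceptions <= tolerable_exceptions else "reject"
    return exceptions, decision


# Hypothetical sample: 25 items tested, 2 exceptions found.
sample_results = [True] * 23 + [False] * 2

print(accept_reject(sample_results, tolerable_exceptions=1))
print(accept_reject(sample_results, tolerable_exceptions=2))
```

With 2 exceptions, the test is rejected when only 1 exception is tolerable and accepted when 2 are tolerable. The result is not projected to the population as a monetary amount.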

Use technology to perform testing

Using technology to verify the completeness and accuracy of complex or large volumes of system-generated outputs can be an efficient audit approach. To interrogate data, computer-based tools such as IDEA or Power BI may be used to conduct tests that cannot be done manually. Such testing is generally used to validate the accuracy and completeness of processing by the information systems that generated the data.

The following are examples of substantive tests that can be done using technology:

- finding and following up on anomalies such as outliers associated with a specific geographic location

- replicating the system-generated output by running your own independent queries or programs on the actual source data (that is, reperformance of the report)

- evaluating the logic of the programmed system-generated output or ad hoc query, by perhaps

    - inspecting application system configurations

    - inspecting vendor system documentation

    - interviewing program developers (usually not enough by itself)

Engagement teams will still need to test the reliability of the source data included in the system-generated output.
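
Reperformance of a system-generated output can be sketched in Python as follows. This is an illustrative example under stated assumptions: the report is assumed to be a simple total by region, the function names `reperform_totals` and `compare_to_report`, the field names, and the sample data are hypothetical, and the tolerance of 0.005 is an arbitrary allowance for rounding.

```python
from collections import defaultdict


def reperform_totals(source_rows, group_field, amount_field):
    """Recompute totals per group directly from the source data."""
    totals = defaultdict(float)
    for row in source_rows:
        totals[row[group_field]] += row[amount_field]
    return dict(totals)


def compare_to_report(recomputed, reported, tolerance=0.005):
    """Return the groups where the report and the recomputation differ."""
    differences = {}
    for group in sorted(set(recomputed) | set(reported)):
        expected = recomputed.get(group)
        shown = reported.get(group)
        if expected is None or shown is None or abs(expected - shown) > tolerance:
            differences[group] = {"recomputed": expected, "reported": shown}
    return differences


# Hypothetical source data and system-generated report.
source_rows = [
    {"region": "East", "amount": 100.0},
    {"region": "East", "amount": 50.0},
    {"region": "West", "amount": 200.0},
]
reported = {"East": 150.0, "West": 210.0, "North": 40.0}

recomputed = reperform_totals(source_rows, "region", "amount")
differences = compare_to_report(recomputed, reported)
print(differences)
```

In this sample, the report shows a different total for West and a North total that has no support in the source data. Both differences would need to be investigated, and the source data itself would still need to be tested.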

A variety of programming skills are needed, depending on the scope of what will be tested—from producing cross-tabulations on related data elements to duplicating sophisticated automated processes using more advanced programming techniques. Consult with internal specialists as needed.

Data integrity checks

The integrity of data refers to the accuracy with which data has been extracted from the information system (source), transferred to engagement teams, and transformed into an appropriate format for engagement teams to use. Data integrity checks alone are not sufficient to assess the reliability of data.

Data integrity checks include checking for

- out-of-range values, such as dates outside of scope

- invalid values

- the total number of records provided against record count

- the total amount of a field provided against control totals

- gaps in fields or missing data, such as missing dates, locations, regions, identification numbers, registration numbers, or other key fields

- missing values

- duplicate records
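
Several of the checks listed above can be sketched in Python as follows. This is a minimal illustration, assuming that each record has an `id`, a `date`, and an `amount` field and that the entity has provided a record count and a control total. The function name `integrity_checks`, the field names, and the sample data are hypothetical.

```python
from collections import Counter
from datetime import date


def integrity_checks(records, expected_count, expected_total, scope_start, scope_end):
    """Run basic integrity checks on a list of dict records.

    Each record is assumed to have 'id', 'date' (datetime.date or None),
    and 'amount' (number or None).
    """
    id_counts = Counter(r["id"] for r in records)
    amounts = [r["amount"] for r in records if r["amount"] is not None]
    return {
        # Total number of records provided against the record count.
        "record_count_matches": len(records) == expected_count,
        # Total amount of a field against the control total.
        "control_total_matches": abs(sum(amounts) - expected_total) < 0.005,
        # Out-of-range values: dates outside the audit scope.
        "out_of_range_dates": [
            r["id"] for r in records
            if r["date"] is not None and not (scope_start <= r["date"] <= scope_end)
        ],
        # Missing values in key fields.
        "missing_values": [
            r["id"] for r in records
            if r["date"] is None or r["amount"] is None
        ],
        # Duplicate records, identified here by a repeated ID.
        "duplicate_ids": sorted(k for k, n in id_counts.items() if n > 1),
    }


# Hypothetical extract for illustration.
records = [
    {"id": "A1", "date": date(2023, 4, 15), "amount": 100.0},
    {"id": "A2", "date": date(2022, 12, 31), "amount": 50.0},
    {"id": "A3", "date": None, "amount": 25.0},
    {"id": "A1", "date": date(2023, 4, 15), "amount": 100.0},
]

checks = integrity_checks(
    records,
    expected_count=4,
    expected_total=275.0,
    scope_start=date(2023, 4, 1),
    scope_end=date(2024, 3, 31),
)
print(checks)
```

Passing these checks indicates only that the extract was transferred intact. It does not, by itself, provide evidence that the underlying data is reliable.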

Other data integrity checks include

- looking for unexpected aspects of the data—for example, extremely high values associated with a certain geographic location

- testing relationships between data elements, such as whether data elements correctly follow a skip pattern from a questionnaire

- ensuring consistency with other source information (such as public or internal reports)
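
A test of relationships between data elements, such as a questionnaire skip pattern, can be sketched as follows. This is an illustration under an assumed rule: a follow-up question is answered only when a filter question has a given answer and is otherwise left blank. The function name `skip_pattern_violations`, the question names `q1` and `q2`, and the sample responses are hypothetical.

```python
def skip_pattern_violations(responses, filter_q, follow_up_q, trigger="Yes"):
    """Return the IDs of responses that break a simple skip pattern.

    Assumed rule: the follow-up question is answered only when the
    filter question equals `trigger`; otherwise it is blank (None).
    """
    violations = []
    for response in responses:
        should_answer = response[filter_q] == trigger
        answered = response[follow_up_q] is not None
        if should_answer != answered:
            violations.append(response["id"])
    return violations


# Hypothetical questionnaire responses for illustration.
responses = [
    {"id": "R1", "q1": "Yes", "q2": "5"},
    {"id": "R2", "q1": "No", "q2": None},
    {"id": "R3", "q1": "No", "q2": "3"},
    {"id": "R4", "q1": "Yes", "q2": None},
]

violations = skip_pattern_violations(responses, "q1", "q2")
print(violations)
```

Here, `R3` answered a question that should have been skipped and `R4` skipped a question that should have been answered, so both would be flagged for follow-up.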

Data used by a practitioner’s expert or entity’s expert

Where the work of an expert is relevant to the context of an engagement, the reliability of data provided to the expert should be evaluated. Where a practitioner’s expert has been engaged, the practitioner should be directly involved in and knowledgeable about the data and other information provided to the expert. Where the entity engages an expert directly, the data and other information necessary for the expert to perform the work will often be provided without the practitioner’s direct involvement or knowledge. As such, when using the work of an entity’s expert, engagement teams should perform additional procedures to identify and assess the data.

For example, where an engagement team plans to use the work of an actuary engaged by the entity, the engagement team should ask management and the actuary to identify all relevant data and information used by the expert and, where appropriate, test the underlying completeness and accuracy of such data and information. For example, census data used by an actuary are typically extracted from the entity’s payroll or personnel information systems; therefore, testing the completeness and accuracy of the data is necessary to evaluate the reliability of the source data.

For more information on the use of experts, refer to OAG Audit 2070 Use of experts.

Outcomes of the assessment and documentation

Outcomes of the assessment

After the assessment of data reliability, engagement teams need to analyze the results of the work performed and conclude on whether the data is reliable. The assessment will lead engagement teams to conclude that the data is either reliable or unreliable.

Data is reliable when engagement teams obtain reasonable assurance on the completeness and accuracy of data and are willing to accept the extent of any errors present in the data and the risk associated with using the data. The greater the number of significant errors in key data elements, the higher the likelihood that the data is unreliable and cannot be used for the purposes of engagement teams.

Documentation

The audit logic matrix for the engagement should briefly address how an engagement team plans to assess the reliability of data, as well as any limitations that might be in place because of errors in the data. All work performed as part of the data reliability assessment should be documented and included in the engagement workpapers. This includes all testing, information reviews, and interviews related to data reliability. In addition, decisions made during the assessment, including the final assessment of whether the data is sufficiently reliable for the purposes of the engagement, should be summarized and included with the workpapers.